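MediaPipe is a framework for building cross-platform, multimodal applied ML pipelines. It covers fast ML inference, classic computer vision, and media processing such as video decoding. The example MediaPipe graph for object detection and tracking consists of four compute nodes: a PacketResampler calculator; the previously released ObjectDetection subgraph; an ObjectTracking subgraph that wraps the BoxTracking subgraph; and a Renderer subgraph that draws the visualization.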

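The ObjectDetection subgraph runs only on request, for example at an arbitrary frame rate or when triggered by a specific signal. More specifically, in this example the PacketResampler temporally subsamples the incoming video frames to 0.5 fps before they are passed to ObjectDetection. You can configure a different frame rate in the PacketResampler options. Because detected objects are tracked between detections instead of being re-detected on every frame, the results show less temporal jitter and object IDs can be maintained across frames.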
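To make the resampling step concrete, here is a minimal sketch of temporal subsampling in plain Java. It is not MediaPipe's PacketResampler implementation: the class `FrameSubsampler` and its `subsample` method are hypothetical names, and the rule used (keep the first frame at or after each output period) is only one simple way to reduce a stream to a target frame rate.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: a hypothetical helper, not part of MediaPipe.
final class FrameSubsampler {
    // Keeps the first frame whose timestamp is at or after the next due time.
    static List<Long> subsample(long[] timestampsUs, double targetFps) {
        long periodUs = Math.round(1_000_000.0 / targetFps);
        List<Long> kept = new ArrayList<>();
        long nextDueUs = Long.MIN_VALUE;
        for (long ts : timestampsUs) {
            if (ts >= nextDueUs) {
                kept.add(ts);
                nextDueUs = ts + periodUs;
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Five seconds of 30 fps input: 150 frame timestamps in microseconds.
        long[] input = new long[150];
        for (int i = 0; i < input.length; i++) {
            input[i] = Math.round(i * 1_000_000.0 / 30.0);
        }
        // At 0.5 fps one frame is kept every two seconds.
        System.out.println(subsample(input, 0.5)); // prints [0, 2000000, 4000000]
    }
}
```

With a 0.5 fps target, only 3 of the 150 input frames would reach the detector in this sketch, which is why the expensive detection step can stay off the per-frame path.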

MediaPipe source repository: https://github.com/google/mediapipe

Step 1: Install the MediaPipe framework

Install the dependencies.

sudo apt-get update && sudo apt-get install -y build-essential git python zip adb openjdk-8-jdk

Install the Bazel build environment, since MediaPipe is built with Bazel.

curl -sLO --retry 5 --retry-max-time 10 \
  https://storage.googleapis.com/bazel/2.0.0/release/bazel-2.0.0-installer-linux-x86_64.sh && \
sudo mkdir -p /usr/local/bazel/2.0.0 && \
chmod 755 bazel-2.0.0-installer-linux-x86_64.sh && \
sudo ./bazel-2.0.0-installer-linux-x86_64.sh --prefix=/usr/local/bazel/2.0.0 && \
source /usr/local/bazel/2.0.0/lib/bazel/bin/bazel-complete.bash

/usr/local/bazel/2.0.0/lib/bazel/bin/bazel version && \
alias bazel='/usr/local/bazel/2.0.0/lib/bazel/bin/bazel'

Install adb. Windows also needs the same adb version installed; the matching Windows build can be downloaded from: https://dl.google.com/android/repository/platform-tools_r26.0.1-windows.zip

sudo apt-get install android-tools-adb

adb version

# Android Debug Bridge version 1.0.39
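Since the versions on both machines are meant to match, it can help to compare them programmatically. The following is a minimal sketch in plain Java, assuming the `adb version` output contains a line of the form shown above; the class `AdbVersionCheck` and its methods are hypothetical names introduced for illustration.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative only: extracts and compares the version from `adb version` output.
final class AdbVersionCheck {
    private static final Pattern VERSION =
        Pattern.compile("Android Debug Bridge version (\\d+(?:\\.\\d+)*)");

    // Returns the dotted version string, or empty if the line is not found.
    static Optional<String> parse(String adbVersionOutput) {
        Matcher m = VERSION.matcher(adbVersionOutput);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    // True only when both outputs contain a version and the versions are equal.
    static boolean sameVersion(String outputA, String outputB) {
        Optional<String> a = parse(outputA);
        return a.isPresent() && a.equals(parse(outputB));
    }

    public static void main(String[] args) {
        String linux = "Android Debug Bridge version 1.0.39";
        String windows = "Android Debug Bridge version 1.0.39";
        System.out.println(parse(linux).orElse("unknown")); // prints 1.0.39
        System.out.println(sameVersion(linux, windows));    // prints true
    }
}
```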

Clone the MediaPipe source code.

git clone https://github.com/google/mediapipe.git

cd mediapipe

Install OpenCV by running the following command.

sudo apt-get install libopencv-core-dev libopencv-highgui-dev \
  libopencv-calib3d-dev libopencv-features2d-dev \
  libopencv-imgproc-dev libopencv-video-dev

Run the following commands to check that the environment is set up correctly.

export GLOG_logtostderr=1

bazel run --define MEDIAPIPE_DISABLE_GPU=1 \
  mediapipe/examples/desktop/hello_world:hello_world

If the environment is set up correctly, the following is printed:

I20200707 09:21:50.275205 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.276554 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.276665 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.276768 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.276887 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.277523 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.278563 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.279263 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.279850 16138 hello_world.cc:56] Hello World!

I20200707 09:21:50.280354 16138 hello_world.cc:56] Hello World!

Step 2: Build the MediaPipe Android AAR

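From the mediapipe root directory, run the following script to install the Android SDK and NDK. The installer asks you to accept license agreements, so enter y when prompted to continue. After the script finishes, it is worth confirming that the SDK and NDK were actually downloaded into the expected directories.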

chmod +x ./setup_android_sdk_and_ndk.sh

bash ./setup_android_sdk_and_ndk.sh ~/Android/Sdk ~/Android/Ndk r18b

This normally does not happen unless the repository was cloned on Windows, but if you see the error $'\r': command not found, do the following.

vim setup_android_sdk_and_ndk.sh

:set ff=unix

:wq

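Add the SDK and NDK environment variables. Given the arguments passed to the script above, the SDK and NDK directories are as shown below. Open ~/.bashrc with vim, add the following lines (the general form of the paths is given in the comments), and finally run source ~/.bashrc so the settings take effect.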

export ANDROID_HOME=/home/test/Android/Sdk

export ANDROID_NDK_HOME=/home/test/Android/Ndk/android-ndk-r18b

# General form (replace <username> with your own user name):

# export ANDROID_HOME=/home/<username>/Android/Sdk

# export ANDROID_NDK_HOME=/home/<username>/Android/Ndk/android-ndk-r18b

Create the build file that MediaPipe uses to generate the Android AAR:

cd mediapipe/examples/android/src/java/com/google/mediapipe/apps/

mkdir buid_aar && cd buid_aar

vim BUILD

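The BUILD file contents are shown below. name is the name of the generated AAR, and calculators is the set of models and calculators to include. Other supported models and calculators can be found under mediapipe/graphs/, where every directory is a model supported by MediaPipe. The hand_tracking directory is the model used here; its supported calculators are listed as cc_library targets in that directory's BUILD file. Because we are deploying to Android, choose the mobile calculators. This tutorial uses mobile_calculators, which detects the keypoints of a single hand. To detect multiple hands, change it to multi_hand_mobile_calculators.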

load("//mediapipe/java/com/google/mediapipe:mediapipe_aar.bzl", "mediapipe_aar")

mediapipe_aar(

name = "mediapipe_hand_tracking",

calculators = ["//mediapipe/graphs/hand_tracking:mobile_calculators"],

)

Return to the mediapipe root directory and run the following commands to build the Android AAR. On success, the file bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar/mediapipe_hand_tracking.aar is generated.

chmod -R 755 mediapipe/

bazel build -c opt --fat_apk_cpu=arm64-v8a,armeabi-v7a \
  //mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar:mediapipe_hand_tracking

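Run the following command to build the MediaPipe binary graph. The target again comes from the BUILD file under mediapipe/graphs/hand_tracking: the path stays the same and only the target name after it changes. This time, look for the name of the mediapipe_binary_graph rule that matches the model you are using. The one used here detects the keypoints of a single hand; for multiple hands, use multi_hand_tracking_mobile_gpu_binary_graph. On success, bazel-bin/mediapipe/graphs/hand_tracking/hand_tracking_mobile_gpu.binarypb is generated.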

bazel build -c opt mediapipe/graphs/hand_tracking:hand_tracking_mobile_gpu_binary_graph
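The single-hand and multi-hand variants each involve three names that have to be changed together: the calculators target in the AAR BUILD file, the binary graph target, and the landmark output stream read by the app. The sketch below collects them in one place. The class `HandGraphNames` is a hypothetical helper, and `binaryGraphFile()` assumes the output file is the target name with `_binary_graph` replaced by `.binarypb`, which matches the single-hand case in this tutorial but has not been verified here for the multi-hand graph.

```java
// Illustrative only: groups the names that must change together per variant.
final class HandGraphNames {
    enum Variant {
        SINGLE_HAND("mobile_calculators",
                    "hand_tracking_mobile_gpu_binary_graph",
                    "hand_landmarks"),
        MULTI_HAND("multi_hand_mobile_calculators",
                   "multi_hand_tracking_mobile_gpu_binary_graph",
                   "multi_hand_landmarks");

        final String calculatorsTarget;  // used in the mediapipe_aar rule
        final String binaryGraphTarget;  // used with bazel build
        final String landmarksStream;    // used in the Android app

        Variant(String calculatorsTarget, String binaryGraphTarget, String landmarksStream) {
            this.calculatorsTarget = calculatorsTarget;
            this.binaryGraphTarget = binaryGraphTarget;
            this.landmarksStream = landmarksStream;
        }

        // Assumption: "<name>_binary_graph" produces "<name>.binarypb".
        String binaryGraphFile() {
            String suffix = "_binary_graph";
            String base = binaryGraphTarget.endsWith(suffix)
                ? binaryGraphTarget.substring(0, binaryGraphTarget.length() - suffix.length())
                : binaryGraphTarget;
            return base + ".binarypb";
        }
    }

    public static void main(String[] args) {
        for (Variant v : Variant.values()) {
            System.out.println(v + ": " + v.calculatorsTarget + ", "
                + v.binaryGraphFile() + ", " + v.landmarksStream);
        }
    }
}
```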

Step 3: Build the Android project

1. In Android Studio, create an empty project named TestMediaPipe.

2. Copy the AAR file built in the previous step into app/libs/. It is located at the following path under the mediapipe root directory:

bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar/mediapipe_hand_tracking.aar

3. Copy the following files into app/src/main/assets/.

bazel-bin/mediapipe/graphs/hand_tracking/hand_tracking_mobile_gpu.binarypb

mediapipe/models/handedness.txt

mediapipe/models/hand_landmark.tflite

mediapipe/models/palm_detection.tflite

mediapipe/models/palm_detection_labelmap.txt
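A missing asset typically only shows up at run time, so a quick check before building can save a round trip. The following is a minimal sketch in plain Java that reports which of the five files listed above are absent from an assets directory. The class `AssetCheck` is a hypothetical helper, and the default path `app/src/main/assets` assumes the program is run from the Android project root.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Illustrative only: verifies the asset files listed in this step are present.
final class AssetCheck {
    static final List<String> REQUIRED = List.of(
        "hand_tracking_mobile_gpu.binarypb",
        "handedness.txt",
        "hand_landmark.tflite",
        "palm_detection.tflite",
        "palm_detection_labelmap.txt");

    // Returns the required file names that are not regular files in assetsDir.
    static List<String> missingFiles(Path assetsDir) {
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(assetsDir.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Path dir = Paths.get(args.length > 0 ? args[0] : "app/src/main/assets");
        List<String> missing = missingFiles(dir);
        System.out.println(missing.isEmpty() ? "All assets present" : "Missing: " + missing);
    }
}
```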

4. Download the OpenCV SDK from the address below. After unpacking it, copy the arm64-v8a and armeabi-v7a directories under OpenCV-android-sdk/sdk/native/libs/ into the Android project's app/src/main/jniLibs/ directory.

https://github.com/opencv/opencv/releases/download/3.4.3/opencv-3.4.3-android-sdk.zip

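5. Add the following dependencies in app/build.gradle. Besides adding the new libraries, add '*.aar' to the fileTree line at the top, otherwise the build fails. You also need to set the project's Java version to 1.8.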

dependencies {

implementation fileTree(dir: "libs", include: ["*.jar", '*.aar'])

implementation 'androidx.appcompat:appcompat:1.1.0'

implementation 'androidx.constraintlayout:constraintlayout:1.1.3'

testImplementation 'junit:junit:4.13'

androidTestImplementation 'androidx.test.ext:junit:1.1.1'

androidTestImplementation 'androidx.test.espresso:espresso-core:3.2.0'

// MediaPipe deps

implementation 'com.google.flogger:flogger:0.3.1'

implementation 'com.google.flogger:flogger-system-backend:0.3.1'

implementation 'com.google.code.findbugs:jsr305:3.0.2'

implementation 'com.google.guava:guava:27.0.1-android'

implementation 'com.google.protobuf:protobuf-java:3.11.4'

// CameraX core library

implementation "androidx.camera:camera-core:1.0.0-alpha06"

implementation "androidx.camera:camera-camera2:1.0.0-alpha06"

}

// Add inside the android { } block

compileOptions {

targetCompatibility = 1.8

sourceCompatibility = 1.8

}

6. Add the camera permission in AndroidManifest.xml.

<!-- For using the camera -->

<uses-permission android:name="android.permission.CAMERA" />

<uses-feature android:name="android.hardware.camera" />

<uses-feature android:name="android.hardware.camera.autofocus" />

<!-- For MediaPipe -->

<uses-feature android:glEsVersion="0x00020000" android:required="true" />
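The `android:glEsVersion` value packs the OpenGL ES version into a 32-bit number: the upper 16 bits hold the major version and the lower 16 bits the minor version. The short sketch below decodes such a value in plain Java; the class `GlEsVersion` is a hypothetical helper written only to show the bit layout.

```java
// Illustrative only: decodes an android:glEsVersion value.
final class GlEsVersion {
    // Major version is stored in the upper 16 bits.
    static int major(int glEsVersion) {
        return glEsVersion >>> 16;
    }

    // Minor version is stored in the lower 16 bits.
    static int minor(int glEsVersion) {
        return glEsVersion & 0xFFFF;
    }

    static String describe(int glEsVersion) {
        return "OpenGL ES " + major(glEsVersion) + "." + minor(glEsVersion);
    }

    public static void main(String[] args) {
        System.out.println(describe(0x00020000)); // prints OpenGL ES 2.0
        System.out.println(describe(0x00030001)); // prints OpenGL ES 3.1
    }
}
```

So the manifest entry above declares that the app requires at least OpenGL ES 2.0.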

7. Update the layout and the activity logic. The code for MainActivity.java and activity_main.xml follows.

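The following is activity_main.xml. The structure is simple: a FrameLayout wrapping a TextView. The TextView is normally visible only when the camera is not working; otherwise the FrameLayout shows the video captured by the camera.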

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent">

<FrameLayout

android:id="@+id/preview_display_layout"

android:layout_width="match_parent"

android:layout_height="match_parent">

<TextView

android:id="@+id/no_camera_access_view"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:gravity="center"

android:text="Camera connection failed" />

</FrameLayout>

</LinearLayout>

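MainActivity.java follows. For the output stream names of a graph, see the corresponding Java code under mediapipe/examples/android/src/java/com/google/mediapipe/apps/. For example, the output stream for multiple hands is named multi_hand_landmarks.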

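import android.graphics.SurfaceTexture;
import android.os.Bundle;
import android.util.Log;
import android.util.Size;
import android.view.SurfaceHolder;
import android.view.SurfaceView;
import android.view.View;
import android.view.ViewGroup;
import androidx.annotation.NonNull;
import androidx.appcompat.app.AppCompatActivity;
import com.google.mediapipe.components.CameraHelper;
import com.google.mediapipe.components.CameraXPreviewHelper;
import com.google.mediapipe.components.ExternalTextureConverter;
import com.google.mediapipe.components.FrameProcessor;
import com.google.mediapipe.components.PermissionHelper;
import com.google.mediapipe.formats.proto.LandmarkProto.NormalizedLandmark;
import com.google.mediapipe.formats.proto.LandmarkProto.NormalizedLandmarkList;
import com.google.mediapipe.framework.AndroidAssetUtil;
import com.google.mediapipe.framework.PacketGetter;
import com.google.mediapipe.glutil.EglManager;
import com.google.protobuf.InvalidProtocolBufferException;

public class MainActivity extends AppCompatActivity {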

private static final String TAG = "MainActivity";

// Asset file name and stream names

private static final String BINARY_GRAPH_NAME = "hand_tracking_mobile_gpu.binarypb";

private static final String INPUT_VIDEO_STREAM_NAME = "input_video";

private static final String OUTPUT_VIDEO_STREAM_NAME = "output_video";

private static final String OUTPUT_HAND_PRESENCE_STREAM_NAME = "hand_presence";

private static final String OUTPUT_LANDMARKS_STREAM_NAME = "hand_landmarks";

private SurfaceTexture previewFrameTexture;

private SurfaceView previewDisplayView;

private EglManager eglManager;

private FrameProcessor processor;

private ExternalTextureConverter converter;

private CameraXPreviewHelper cameraHelper;

private boolean handPresence;

// Which camera to use

private static final boolean USE_FRONT_CAMERA = false;

// OpenGL assumes the image origin is at the bottom-left corner, while MediaPipe generally assumes it is at the top-left, so frames are flipped vertically

private static final boolean FLIP_FRAMES_VERTICALLY = true;

// Load the native libraries

static {

System.loadLibrary("mediapipe_jni");

System.loadLibrary("opencv_java3");

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

previewDisplayView = new SurfaceView(this);

setupPreviewDisplayView();

// Request the camera permission

PermissionHelper.checkAndRequestCameraPermissions(this);

// Initialize the asset manager so that MediaPipe native code can access the app assets

AndroidAssetUtil.initializeNativeAssetManager(this);

eglManager = new EglManager(null);

// Create a frame processor by loading the binary graph

processor = new FrameProcessor(this,

eglManager.getNativeContext(),

BINARY_GRAPH_NAME,

INPUT_VIDEO_STREAM_NAME,

OUTPUT_VIDEO_STREAM_NAME);

processor.getVideoSurfaceOutput().setFlipY(FLIP_FRAMES_VERTICALLY);

// Receive the hand-presence output of the graph

processor.addPacketCallback(

OUTPUT_HAND_PRESENCE_STREAM_NAME,

(packet) -> {

handPresence = PacketGetter.getBool(packet);

if (!handPresence) {

Log.d(TAG, "[TS:" + packet.getTimestamp() + "] Hand presence is false, no hands detected.");

}

});

// Receive the hand landmark output of the graph

processor.addPacketCallback(

OUTPUT_LANDMARKS_STREAM_NAME,

(packet) -> {

byte[] landmarksRaw = PacketGetter.getProtoBytes(packet);

try {

NormalizedLandmarkList landmarks = NormalizedLandmarkList.parseFrom(landmarksRaw);

if (landmarks == null || !handPresence) {

Log.d(TAG, "[TS:" + packet.getTimestamp() + "] No hand landmarks.");

return;

}

// The landmarks are only valid when a hand was detected

Log.d(TAG,

"[TS:" + packet.getTimestamp()

+ "] #Landmarks for hand: "

+ landmarks.getLandmarkCount());

Log.d(TAG, getLandmarksDebugString(landmarks));

} catch (InvalidProtocolBufferException e) {

Log.e(TAG, "Failed to parse the landmarks packet: " + e);

}

});

}

@Override

protected void onResume() {

super.onResume();

converter = new ExternalTextureConverter(eglManager.getContext());

converter.setFlipY(FLIP_FRAMES_VERTICALLY);

converter.setConsumer(processor);

if (PermissionHelper.cameraPermissionsGranted(this)) {

startCamera();

}

}

@Override

protected void onPause() {

super.onPause();

converter.close();

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

PermissionHelper.onRequestPermissionsResult(requestCode, permissions, grantResults);

}

// Compute the preview size

protected Size computeViewSize(int width, int height) {

return new Size(width, height);

}

protected void onPreviewDisplaySurfaceChanged(SurfaceHolder holder, int format, int width, int height) {

// Set the preview size

Size viewSize = computeViewSize(width, height);

Size displaySize = cameraHelper.computeDisplaySizeFromViewSize(viewSize);

// Swap width and height if the camera is rotated

boolean isCameraRotated = cameraHelper.isCameraRotated();

converter.setSurfaceTextureAndAttachToGLContext(

previewFrameTexture,

isCameraRotated ? displaySize.getHeight() : displaySize.getWidth(),

isCameraRotated ? displaySize.getWidth() : displaySize.getHeight());

}

private void setupPreviewDisplayView() {

previewDisplayView.setVisibility(View.GONE);

ViewGroup viewGroup = findViewById(R.id.preview_display_layout);

viewGroup.addView(previewDisplayView);

previewDisplayView

.getHolder()

.addCallback(

new SurfaceHolder.Callback() {

@Override

public void surfaceCreated(SurfaceHolder holder) {

processor.getVideoSurfaceOutput().setSurface(holder.getSurface());

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

onPreviewDisplaySurfaceChanged(holder, format, width, height);

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

processor.getVideoSurfaceOutput().setSurface(null);

}

});

}

// Called once the camera has started

protected void onCameraStarted(SurfaceTexture surfaceTexture) {

// Show the preview

previewFrameTexture = surfaceTexture;

previewDisplayView.setVisibility(View.VISIBLE);

}

// Target camera resolution; null expresses no preference and lets the camera helper decide

protected Size cameraTargetResolution() {

return null;

}

// Start the camera

public void startCamera() {

cameraHelper = new CameraXPreviewHelper();

cameraHelper.setOnCameraStartedListener(this::onCameraStarted);

CameraHelper.CameraFacing cameraFacing =

USE_FRONT_CAMERA ? CameraHelper.CameraFacing.FRONT : CameraHelper.CameraFacing.BACK;

cameraHelper.startCamera(this, cameraFacing, null, cameraTargetResolution());

}

// Format the landmarks as a debug string

private static String getLandmarksDebugString(NormalizedLandmarkList landmarks) {

int landmarkIndex = 0;

StringBuilder landmarksString = new StringBuilder();

for (NormalizedLandmark landmark : landmarks.getLandmarkList()) {

landmarksString.append("\t\tLandmark[").append(landmarkIndex).append("]: (").append(landmark.getX()).append(", ").append(landmark.getY()).append(", ").append(landmark.getZ()).append(")\n");

++landmarkIndex;

}

return landmarksString.toString();

}

}
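The landmark callback above only logs the coordinates. Since NormalizedLandmark x and y values are normalized by the image width and height, a common next step is to convert them to pixels, for example to draw a box around the hand. The following is a minimal sketch in plain Java with no MediaPipe dependency: `LandmarkGeometry` and its nested `Point` class are hypothetical stand-ins, and clamping to [0, 1] is an added assumption so that points slightly outside the frame still give a box inside the image.

```java
import java.util.Arrays;

// Illustrative only: converts normalized landmarks to a pixel bounding box.
final class LandmarkGeometry {
    // Hypothetical stand-in for NormalizedLandmark: x and y relative to image size.
    static final class Point {
        final float x;
        final float y;

        Point(float x, float y) {
            this.x = x;
            this.y = y;
        }
    }

    static float clamp01(float v) {
        return Math.max(0f, Math.min(1f, v));
    }

    // Returns {left, top, right, bottom} in pixels.
    static int[] boundingBoxPx(Point[] landmarks, int imageWidth, int imageHeight) {
        if (landmarks.length == 0) {
            throw new IllegalArgumentException("no landmarks");
        }
        float minX = Float.MAX_VALUE, minY = Float.MAX_VALUE;
        float maxX = -Float.MAX_VALUE, maxY = -Float.MAX_VALUE;
        for (Point p : landmarks) {
            minX = Math.min(minX, p.x);
            minY = Math.min(minY, p.y);
            maxX = Math.max(maxX, p.x);
            maxY = Math.max(maxY, p.y);
        }
        return new int[] {
            Math.round(clamp01(minX) * imageWidth),
            Math.round(clamp01(minY) * imageHeight),
            Math.round(clamp01(maxX) * imageWidth),
            Math.round(clamp01(maxY) * imageHeight)
        };
    }

    public static void main(String[] args) {
        Point[] hand = {
            new Point(0.25f, 0.5f), new Point(0.75f, 0.25f), new Point(0.5f, 1.0f)
        };
        // prints [160, 120, 480, 480]
        System.out.println(Arrays.toString(boundingBoxPx(hand, 640, 480)));
    }
}
```

In the activity, the same arithmetic could be applied to `landmark.getX()` and `landmark.getY()` inside the landmark callback, using the size of the preview surface as the image size.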

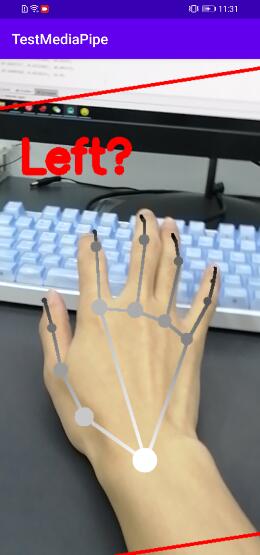

效果圖如下:
