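# Project Overview

This project implements speech emotion recognition. Its current accuracy is modest, and it is intended mainly as a learning resource. It will keep being optimized to improve accuracy, and suggestions are welcome.

Source code: [SpeechEmotionRecognition-PaddlePaddle](https://github.com/yeyupiaoling/SpeechEmotionRecognition-PaddlePaddle)

# Requirements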

- Anaconda 3

- Python 3.8

- PaddlePaddle 2.4.0

- Windows 10 or Ubuntu 18.04

# Model Results

| Model | Params (M) | Preprocessing method | Dataset | Number of classes | Accuracy |
|---|---|---|---|---|---|
| BidirectionalLSTM | 1.8 | Flank | RAVDESS | 8 | 0.95193 |

Notes:

1. Only `Audio_Speech_Actors_01-24.zip` from the RAVDESS dataset is used.
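The parameter count in the table can be cross-checked by hand. The sketch below assumes the layer sizes printed in the model summary of the training log (a 312-dimensional input, a 512-unit projection, a bidirectional LSTM with 256 hidden units per direction, then 256 and `num_class` outputs) and the usual LSTM layout of four gates with two bias vectors per direction. The helper names are illustrative and are not part of ppser.

```python
def linear_params(in_features: int, out_features: int) -> int:
    """Weights plus one bias per output unit."""
    return in_features * out_features + out_features


def bilstm_params(input_size: int, hidden_size: int) -> int:
    """Single-layer bidirectional LSTM: four gates, input and recurrent
    weights, and two bias vectors per direction."""
    per_direction = 4 * (input_size * hidden_size
                         + hidden_size * hidden_size
                         + 2 * hidden_size)
    return 2 * per_direction


def total_params(num_class: int) -> int:
    # Layer sizes are copied from the model summary in the training log.
    return (linear_params(312, 512)
            + bilstm_params(512, 256)
            + linear_params(512, 256)
            + linear_params(256, num_class))


if __name__ == "__main__":
    for n in (6, 8):
        print(f"num_class={n}: {total_params(n):,} parameters")
```

Only the last layer depends on the number of classes, so changing `num_class` moves the total by a few hundred parameters at most.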

# Environment Setup

- First install the GPU build of PaddlePaddle. Skip this step if it is already installed.

```shell
conda install paddlepaddle-gpu==2.4.0 cudatoolkit=10.2 --channel https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/Paddle/
```

- Install the ppser library.

Install with pip:

```shell
python -m pip install ppser -U -i https://pypi.tuna.tsinghua.edu.cn/simple
```

Installing from source is recommended, because it guarantees that you are running the latest code:

```shell
git clone https://github.com/yeyupiaoling/SpeechEmotionRecognition-PaddlePaddle.git
cd SpeechEmotionRecognition-PaddlePaddle/
python setup.py install
```

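# Preparing the Data

The first step is to generate the data lists that the next step reads. The project supports the RAVDESS dataset by default, which is described on the dataset's introduction page. It covers eight emotions: neutral, calm, happy, sad, angry, fearful, disgust and surprised. This project uses only `Audio_Speech_Actors_01-24.zip`, in which the only spoken statements are "Kids are talking by the door" and "Dogs are sitting by the door", so this is a very simple training set. Download the dataset and extract it into the `dataset` directory.

```shell
python create_data.py
```

For a custom dataset, follow the format below. `audio_path` is the path to the audio files. Place the audio dataset under `dataset/audio` in advance, with one folder per class, for example `dataset/audio/angry/······`. Each audio clip should be about 3 seconds long. `audio` is where the data list is stored. Each line of the generated data list has the form `audio_path\tlabel`, with the audio path and the class label separated by a tab character `\t`. You can also modify the function below to match the way your data is stored.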
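As an illustration of the one-folder-per-class layout and the tab-separated list format, here is a minimal sketch that walks such a directory and writes one line per `.wav` file. It is not the project's `get_data_list` function: `build_data_list` is a hypothetical helper, labels are assigned by sorted folder name, and no train/test split or normalization file is produced.

```python
import os
import tempfile


def build_data_list(audio_root: str, list_path: str) -> list:
    """Write one `path<TAB>label` line per audio file; return class names."""
    classes = sorted(
        d for d in os.listdir(audio_root)
        if os.path.isdir(os.path.join(audio_root, d))
    )
    with open(list_path, "w", encoding="utf-8") as f:
        for label, name in enumerate(classes):
            class_dir = os.path.join(audio_root, name)
            for filename in sorted(os.listdir(class_dir)):
                if not filename.lower().endswith(".wav"):
                    continue
                # Forward slashes keep the list portable across systems.
                path = os.path.join(class_dir, filename).replace("\\", "/")
                f.write(f"{path}\t{label}\n")
    return classes


if __name__ == "__main__":
    # Demo on a throwaway directory so the sketch runs anywhere.
    with tempfile.TemporaryDirectory() as root:
        for name in ("angry", "happy"):
            os.makedirs(os.path.join(root, "audio", name))
            open(os.path.join(root, "audio", name, "a.wav"), "wb").close()
        list_file = os.path.join(root, "train_list.txt")
        print(build_data_list(os.path.join(root, "audio"), list_file))
        print(open(list_file, encoding="utf-8").read())
```

Sorting the folder names keeps the label assignment stable between runs, which matters because the trained model only knows the integer labels.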

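Run the `get_data_list('dataset/audios', 'dataset')` function in `create_data.py` to generate the data lists. The same script also generates the normalization file; see the code for details.

```shell
python create_data.py
```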

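The generated list looks like this. Each line holds the audio path followed by the label of that audio clip. Labels start at 0, and the path and label are separated by `\t`.

```
dataset/Audio_Speech_Actors_01-24/Actor_13/03-01-01-01-02-01-13.wav	0
dataset/Audio_Speech_Actors_01-24/Actor_01/03-01-02-01-01-01-01.wav	1
dataset/Audio_Speech_Actors_01-24/Actor_01/03-01-03-02-01-01-01.wav	2
```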
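The labels in these example lines line up with the RAVDESS file-naming convention, in which the third hyphen-separated field of the file name is the emotion code (`01` neutral through `08` surprised). The sketch below decodes that field and subtracts one to get a zero-based label. That `create_data.py` derives labels in exactly this way is an assumption based on the three lines above; `ravdess_label` is a hypothetical helper.

```python
import os

# Emotion codes from the RAVDESS file-naming convention (third field).
RAVDESS_EMOTIONS = {
    "01": "neutral", "02": "calm", "03": "happy", "04": "sad",
    "05": "angry", "06": "fearful", "07": "disgust", "08": "surprised",
}


def ravdess_label(path: str) -> tuple:
    """Return (zero-based label, emotion name) for a RAVDESS file path."""
    stem = os.path.splitext(os.path.basename(path))[0]
    fields = stem.split("-")
    if len(fields) != 7:
        raise ValueError(f"unexpected RAVDESS file name: {path}")
    code = fields[2]
    return int(code) - 1, RAVDESS_EMOTIONS[code]


if __name__ == "__main__":
    for p in (
        "dataset/Audio_Speech_Actors_01-24/Actor_13/03-01-01-01-02-01-13.wav",
        "dataset/Audio_Speech_Actors_01-24/Actor_01/03-01-03-02-01-01-01.wav",
    ):
        print(p, ravdess_label(p))
```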

Note: the `create_standard('configs/bi_lstm.yml')` function in `create_data.py` must be executed, because it generates the normalization file.
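To make the idea of a normalization file concrete, here is a minimal sketch of per-dimension standardization: statistics are fitted once on the training features and then reused for every input. This is a generic z-score illustration in plain Python, not a description of what `create_standard` actually computes or how it stores the result; `fit_standardizer` and `standardize` are hypothetical names.

```python
import math


def fit_standardizer(rows):
    """Per-column mean and standard deviation over a list of feature rows."""
    n = len(rows)
    dims = len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dims)]
    stds = []
    for j in range(dims):
        var = sum((r[j] - means[j]) ** 2 for r in rows) / n
        # A constant column has zero deviation; fall back to 1.0 so the
        # division in standardize() stays defined.
        stds.append(math.sqrt(var) or 1.0)
    return means, stds


def standardize(row, means, stds):
    """Shift and scale one feature row with previously fitted statistics."""
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


if __name__ == "__main__":
    train = [[1.0, 10.0], [3.0, 10.0]]
    means, stds = fit_standardizer(train)
    print(means, stds)
    print(standardize([1.0, 10.0], means, stds))
```

Fitting on the training set only and reusing the saved statistics at evaluation and inference time keeps all three stages on the same feature scale.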

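# Training

Now you can train the model with `train.py`. Most parameters in the configuration file do not need to be changed, but a few must be adjusted to your own dataset. The most important one is the number of classes, `dataset_conf.num_class`, which can differ from dataset to dataset and must match your data. The next is `dataset_conf.batch_size`: reduce it if you run out of GPU memory.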
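One way to pick the number of classes is to count the distinct labels in a generated data list. The sketch below assumes the `path<TAB>label` format described earlier with zero-based labels; `count_classes` is a hypothetical helper, not part of ppser.

```python
import os
import tempfile


def count_classes(list_path: str) -> int:
    """Count distinct labels in a `path<TAB>label` data list."""
    labels = set()
    with open(list_path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            line = line.rstrip("\n")
            if not line:
                continue
            try:
                _path, label = line.rsplit("\t", 1)
                labels.add(int(label))
            except ValueError:
                raise ValueError(
                    f"{list_path}:{line_no}: expected 'path<TAB>label'"
                ) from None
    # Labels start at 0, so a gap-free list has max(label) + 1 classes.
    if labels and max(labels) + 1 != len(labels):
        raise ValueError("labels are not contiguous from 0")
    return len(labels)


if __name__ == "__main__":
    # Demo on a temporary list; in practice pass dataset/train_list.txt.
    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "train_list.txt")
        with open(demo, "w", encoding="utf-8") as f:
            f.write("a.wav\t0\nb.wav\t1\nc.wav\t1\n")
        print(count_classes(demo))
```

The contiguity check catches a list where one class folder was empty or skipped, which would otherwise lead to a classifier head that is too small.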

```shell
# Single-GPU training
CUDA_VISIBLE_DEVICES=0 python train.py
# Multi-GPU training
python -m paddle.distributed.launch --gpus '0,1' train.py
```

Training log output:

```
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:14 - ----------- Extra configuration arguments -----------
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - configs: configs/bi_lstm.yml
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - local_rank: 0
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - pretrained_model: None
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - resume_model: None
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - save_model_path: models/
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:16 - use_gpu: True
[2023-08-18 18:48:49.662963 INFO ] utils:print_arguments:17 - ------------------------------------------------
[2023-08-18 18:48:49.680176 INFO ] utils:print_arguments:19 - ----------- Configuration file parameters -----------
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:22 - dataset_conf:
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:25 - aug_conf:
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - noise_aug_prob: 0.2
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - noise_dir: dataset/noise
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - speed_perturb: True
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - volume_aug_prob: 0.2
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - volume_perturb: False
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:25 - dataLoader:
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - batch_size: 32
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - num_workers: 4
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - do_vad: False
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:25 - eval_conf:
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - batch_size: 1
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:27 - max_duration: 3
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - label_list_path: dataset/label_list.txt
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - max_duration: 3
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - min_duration: 0.5
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - sample_rate: 16000
[2023-08-18 18:48:49.681177 INFO ] utils:print_arguments:29 - scaler_path: dataset/standard.m
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - target_dB: -20
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - test_list: dataset/test_list.txt
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - train_list: dataset/train_list.txt
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - use_dB_normalization: True
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:22 - model_conf:
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - num_class: None
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:22 - optimizer_conf:
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - learning_rate: 0.001
[2023-08-18 18:48:49.682177 INFO ] utils:print_arguments:29 - optimizer: Adam
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - scheduler: WarmupCosineSchedulerLR
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:25 - scheduler_args:
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:27 - max_lr: 0.001
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:27 - min_lr: 1e-05
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:27 - warmup_epoch: 5
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - weight_decay: 1e-06
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:22 - preprocess_conf:
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - feature_method: CustomFeatures
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:22 - train_conf:
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - enable_amp: False
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - log_interval: 10
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:29 - max_epoch: 60
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:31 - use_model: BidirectionalLSTM
[2023-08-18 18:48:49.683184 INFO ] utils:print_arguments:32 - ------------------------------------------------
[2023-08-18 18:48:49.683184 WARNING] trainer:init:66 - Windows does not support multi-threaded data loading; it has been disabled automatically!
 Layer (type)    Input Shape      Output Shape                                 Param #
 Linear-1        [[1, 312]]       [1, 512]                                     160,256
 LSTM-1          [[1, 1, 512]]    [[1, 1, 512], [[2, 1, 256], [2, 1, 256]]]    1,576,960
 Tanh-1          [[1, 512]]       [1, 512]                                     0
 Dropout-1       [[1, 512]]       [1, 512]                                     0
 Linear-2        [[1, 512]]       [1, 256]                                     131,328
 ReLU-1          [[1, 256]]       [1, 256]                                     0
 Linear-3        [[1, 256]]       [1, 6]                                       1,542
================================================================================================
Total params: 1,870,086
Trainable params: 1,870,086
Non-trainable params: 0
Input size (MB): 0.00
Forward/backward pass size (MB): 0.03
Params size (MB): 7.13
Estimated Total Size (MB): 7.16
[2023-08-18 18:48:51.425936 INFO ] trainer:train:378 - Training data: 4407
[2023-08-18 18:48:53.526136 INFO ] trainer:__train_epoch:331 - Train epoch: [1/60], batch: [0/138], loss: 1.80256, accuracy: 0.15625, learning rate: 0.00001000, speed: 15.24 data/sec, eta: 4:49:49
····················
```

# 評估

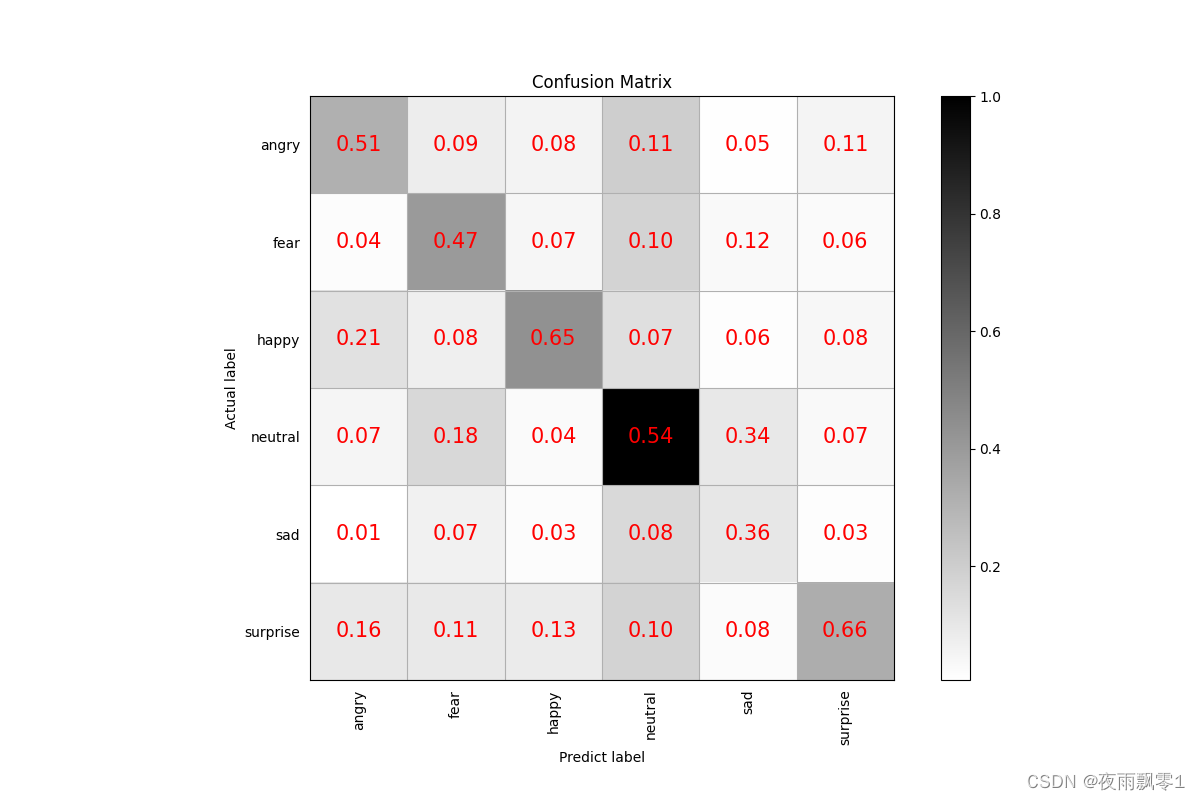

每輪訓練結束可以執行評估,評估會出來輸出準確率,還保存了混合矩陣圖片,保存路徑`output/images/`,如下。
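For readers unfamiliar with the two evaluation outputs, here is a minimal plain-Python sketch of how a confusion matrix and the accuracy relate to each other. It illustrates the general technique only and is not taken from the project's evaluation code; the function names and the toy labels are made up for the example.

```python
def confusion_matrix(y_true, y_pred, num_class):
    """matrix[i][j] counts samples of true class i predicted as class j."""
    matrix = [[0] * num_class for _ in range(num_class)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Correct predictions lie on the diagonal of the matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    m = confusion_matrix(y_true, y_pred, num_class=3)
    for row in m:
        print(row)
    print(f"accuracy: {accuracy(m):.3f}")
```

Off-diagonal cells show which emotions are confused with each other, which a single accuracy number cannot reveal.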

# Inference

Once training has finished, you have a model parameter file. Use this model to predict the emotion of an audio file.

```shell
python infer.py --audio_path=dataset/test.wav
```

# References

- https://github.com/yeyupiaoling/AudioClassification-PaddlePaddle