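Introduction

In an earlier article, "Image Classification on Android Devices with PaddleMobile", I used PaddleMobile, the framework open-sourced by Baidu. In this chapter I use ncnn, Tencent's open-source deep learning framework for mobile devices, to implement image classification on an Android phone. ncnn has been open source for longer and is comparatively more stable.

ncnn on GitHub: https://github.com/Tencent/ncnn

Building the ncnn Library on Ubuntu

1. First, download and unpack the NDK.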

wget https://dl.google.com/android/repository/android-ndk-r17b-linux-x86_64.zip

unzip android-ndk-r17b-linux-x86_64.zip

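2. Set the NDK environment variable to the directory where the NDK was unpacked.

export ANDROID_NDK="/home/test/paddlepaddle/android-ndk-r17b"

Once it is set, check the value with the following command.

root@test:/home/test/paddlepaddle# echo $ANDROID_NDK

/home/test/paddlepaddle/android-ndk-r17b

3. Install cmake. A fairly recent version is required; I used cmake 3.11.2.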

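Download the cmake source:

wget https://cmake.org/files/v3.11/cmake-3.11.2.tar.gz

Unpack the cmake source:

tar -zxvf cmake-3.11.2.tar.gz

Change into the cmake source root and run bootstrap:

cd cmake-3.11.2

./bootstrap

Finally, run the following two commands to build and install cmake:

make

make install

After installation, run cmake --version to check that it succeeded.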

root@test:/home/test/paddlepaddle# cmake --version

cmake version 3.11.2

CMake suite maintained and supported by Kitware (kitware.com/cmake).

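4. Clone the ncnn source.

git clone https://github.com/Tencent/ncnn.git

5. Build the source.

# Change into the ncnn source root

cd ncnn

# Create a new build directory

mkdir -p build-android-armv7

# Change into that directory

cd build-android-armv7

# Run cmake to configure the build

cmake -DCMAKE_TOOLCHAIN_FILE=$ANDROID_NDK/build/cmake/android.toolchain.cmake \
-DANDROID_ABI="armeabi-v7a" -DANDROID_ARM_NEON=ON \
-DANDROID_PLATFORM=android-14 ..

# Build with 4 parallel jobs

make -j4

make install

6. When the build finishes, an install directory is created under build-android-armv7. Everything we built is in that folder:

- include: the header files needed to call ncnn. This folder goes into the Android project's src/main/cpp directory.
- lib: the compiled ncnn library libncnn.a, which later goes into the Android project at src/main/jniLibs/armeabi-v7a/libncnn.a.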

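Converting the Prediction Model

1. Clone the Caffe source.

git clone https://github.com/BVLC/caffe.git

2. Build the Caffe source.

# Change into the Caffe directory

cd caffe

# Run cmake in the current directory

cmake .

# Build with 4 parallel jobs

make -j4

make install

3. Upgrade the Caffe model.

# Copy the model to be converted into caffe/tools, then change into that directory

cd tools

# Upgrade the Caffe model

./upgrade_net_proto_text mobilenet_v2_deploy.prototxt mobilenet_v2_deploy_new.prototxt

./upgrade_net_proto_binary mobilenet_v2.caffemodel mobilenet_v2_new.caffemodel

4. Check the model definition file. Because prediction runs on one image at a time, the first input dimension (the batch size) must be set to dim: 1.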

name: "MOBILENET_V2"

layer {

name: "input"

type: "Input"

top: "data"

input_param {

shape {

dim: 1

dim: 3

dim: 224

dim: 224

}

}

}

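5. Change to the ncnn root directory, that is, the ncnn source cloned in the previous part.

cd ncnn/

6. Build the ncnn source in the root directory.

mkdir -p build

cd build

cmake ..

make -j4

make install

7. Convert the upgraded Caffe model into an ncnn model.

# The previous step produces a tools directory; change into the following directory

cd tools/caffe/

# Copy the upgraded network definition file and weight file into the current directory, then run the following command

./caffe2ncnn mobilenet_v2_deploy_new.prototxt mobilenet_v2_new.caffemodel mobilenet_v2.param mobilenet_v2.bin

8. Obfuscate the model parameters. ncnn2mem converts the plain-text param file into a binary one, so the model file is harder to reuse even if someone decompiles our APK. Copy the mobilenet_v2.param and mobilenet_v2.bin obtained in the previous step to the parent of that directory, that is, the tools directory.

# Change to the parent directory

cd ../

# This command generates mobilenet_v2.param.bin, mobilenet_v2.id.h and mobilenet_v2.mem.h

./ncnn2mem mobilenet_v2.param mobilenet_v2.bin mobilenet_v2.id.h mobilenet_v2.mem.h

Of the files produced by the steps above, the following are needed:

- mobilenet_v2.param.bin: the network's model parameters;
- mobilenet_v2.bin: the network's weights;
- mobilenet_v2.id.h: used when predicting an image.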
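The role of mobilenet_v2.id.h may be easier to see with a small standalone sketch. With the binary param file, blobs are addressed by integer index rather than by name, and the generated header supplies those indices as constants. Everything below is a hypothetical stand-in: the namespace demo_param_id, its values, and the two structs are invented for illustration, and the real constants come from the header that ncnn2mem generates for your network.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a generated id header. The real values are
// written by ncnn2mem and depend on the network.
namespace demo_param_id {
const int BLOB_data = 0;
const int BLOB_prob = 1;
}  // namespace demo_param_id

// Plain-text param style: blobs are looked up by name, so the names ship with the app.
struct NamedBlobs {
    std::unordered_map<std::string, std::vector<float>> blobs;
    const std::vector<float>& get(const std::string& name) const {
        return blobs.at(name);
    }
};

// Binary param style: blobs are looked up by index only, and no names are stored.
struct IndexedBlobs {
    std::vector<std::vector<float>> blobs;
    const std::vector<float>& get(int id) const {
        assert(id >= 0 && static_cast<std::size_t>(id) < blobs.size());
        return blobs[static_cast<std::size_t>(id)];
    }
};
```

This is why the JNI code later passes mobilenet_v2_param_id::BLOB_data and mobilenet_v2_param_id::BLOB_prob instead of the strings "data" and "prob".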

開發Android項目¶

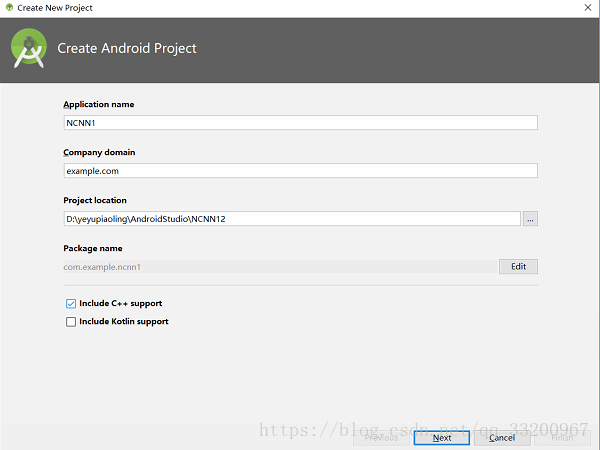

- 我們在Android Studio上創建一個NCNN1的項目,別忘了選擇C++支持。

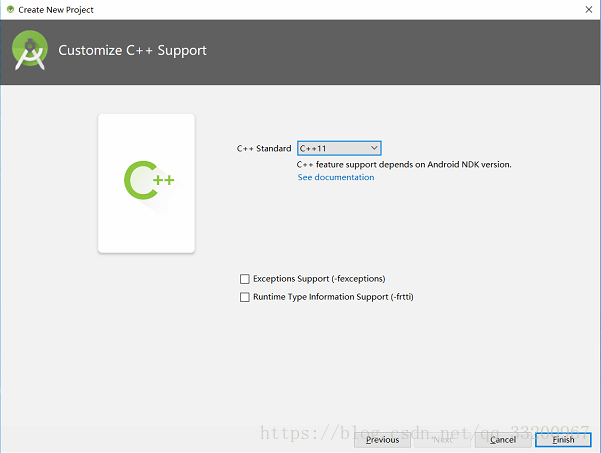

其他的可以直接默認就可以了,在這裏要注意選擇C++11支持。

- 在

main目錄下創建assets目錄,並複製以下目錄到該目錄: mobilenet_v2.param.bin上一步獲取網絡的模型參數;mobilenet_v2.bin上一步獲取網絡的權重;-

synset.txtlabel對應的名稱,下載地址:https://github.com/shicai/MobileNet-Caffe/blob/master/synset.txt。 -

在

cpp目錄下複製在使用Ubuntu編譯NCNN庫部分編譯得到的include文件夾,包括裏面的C++頭文件。 - 把

mobilenet_v2.id.h複製到cpp目錄下。 - 在main目錄下創建

jniLibs/armeabi-v7a/目錄,並把使用Ubuntu編譯NCNN庫部分編譯得到的libncnn.a複製到該目錄。 - 在

cpp目錄下創建一個C++文件,並編寫以下代碼,這段代碼是用於加載模型和預測圖片的:

#include <android/bitmap.h>

#include <android/log.h>

#include <jni.h>

#include <string>

#include <vector>

// ncnn

#include "include/net.h"

#include "mobilenet_v2.id.h"

#include <sys/time.h>

#include <unistd.h>

static ncnn::UnlockedPoolAllocator g_blob_pool_allocator;

static ncnn::PoolAllocator g_workspace_pool_allocator;

static ncnn::Mat ncnn_param;

static ncnn::Mat ncnn_bin;

static ncnn::Net ncnn_net;

extern "C" {

// public native boolean Init(byte[] param, byte[] bin);

JNIEXPORT jboolean JNICALL

Java_com_example_ncnn1_NcnnJni_Init(JNIEnv *env, jobject thiz, jbyteArray param, jbyteArray bin) {

// init param

{

int len = env->GetArrayLength(param);

ncnn_param.create(len, (size_t) 1u);

env->GetByteArrayRegion(param, 0, len, (jbyte *) ncnn_param);

int ret = ncnn_net.load_param((const unsigned char *) ncnn_param);

__android_log_print(ANDROID_LOG_DEBUG, "NcnnJni", "load_param %d %d", ret, len);

}

// init bin

{

int len = env->GetArrayLength(bin);

ncnn_bin.create(len, (size_t) 1u);

env->GetByteArrayRegion(bin, 0, len, (jbyte *) ncnn_bin);

int ret = ncnn_net.load_model((const unsigned char *) ncnn_bin);

__android_log_print(ANDROID_LOG_DEBUG, "NcnnJni", "load_model %d %d", ret, len);

}

ncnn::Option opt;

opt.lightmode = true;

opt.num_threads = 4;

opt.blob_allocator = &g_blob_pool_allocator;

opt.workspace_allocator = &g_workspace_pool_allocator;

ncnn::set_default_option(opt);

return JNI_TRUE;

}

// public native float[] Detect(Bitmap bitmap);

JNIEXPORT jfloatArray JNICALL Java_com_example_ncnn1_NcnnJni_Detect(JNIEnv* env, jobject thiz, jobject bitmap)

{

// ncnn from bitmap

ncnn::Mat in;

{

AndroidBitmapInfo info;

AndroidBitmap_getInfo(env, bitmap, &info);

int width = info.width;

int height = info.height;

if (info.format != ANDROID_BITMAP_FORMAT_RGBA_8888)

return NULL;

void* indata;

AndroidBitmap_lockPixels(env, bitmap, &indata);

// Convert the pixels to an ncnn::Mat, reordering the channels from RGBA to BGR

in = ncnn::Mat::from_pixels((const unsigned char*)indata, ncnn::Mat::PIXEL_RGBA2BGR, width, height);

AndroidBitmap_unlockPixels(env, bitmap);

}

// ncnn_net

std::vector<float> cls_scores;

{

// Subtract the per-channel mean and multiply by the scale

const float mean_vals[3] = {103.94f, 116.78f, 123.68f};

const float scale[3] = {0.017f, 0.017f, 0.017f};

in.substract_mean_normalize(mean_vals, scale);

ncnn::Extractor ex = ncnn_net.create_extractor();

// If the model is not obfuscated (plain-text param file), use ex.input("data", in); instead

ex.input(mobilenet_v2_param_id::BLOB_data, in);

ncnn::Mat out;

// If the model is not obfuscated (plain-text param file), use ex.extract("prob", out); instead

ex.extract(mobilenet_v2_param_id::BLOB_prob, out);

int output_size = out.w;

jfloatArray jOutputData = env->NewFloatArray(output_size);

if (jOutputData == nullptr) return nullptr;

// out stores its output_size floats contiguously, so copy them directly into the Java array

env->SetFloatArrayRegion(jOutputData, 0, output_size,

reinterpret_cast<const jfloat *>(&out[0])); // copy

return jOutputData;

}

}

}
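To make the preprocessing in Detect easier to follow, here is a minimal standalone sketch of its two steps: reordering RGBA pixels into BGR, then applying (value - mean) * scale per channel. This is not ncnn's implementation. The function names rgbaToPlanarBgr and subtractMeanNormalize are invented for illustration, and the sketch assumes a simple planar layout (all B values, then all G, then all R) with no padding between channels.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Convert interleaved RGBA bytes into planar BGR floats, dropping alpha.
// Illustrates the idea behind the RGBA-to-BGR conversion, under the
// layout assumption stated above.
std::vector<float> rgbaToPlanarBgr(const std::vector<unsigned char>& rgba,
                                   int width, int height) {
    assert(width > 0 && height > 0);
    const std::size_t pixels =
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    assert(rgba.size() == pixels * 4);
    std::vector<float> planar(pixels * 3);
    for (std::size_t i = 0; i < pixels; ++i) {
        planar[0 * pixels + i] = rgba[i * 4 + 2];  // B
        planar[1 * pixels + i] = rgba[i * 4 + 1];  // G
        planar[2 * pixels + i] = rgba[i * 4 + 0];  // R
    }
    return planar;
}

// Apply (value - mean[c]) * scale[c] to every value of channel c, in place.
void subtractMeanNormalize(std::vector<float>& planar, std::size_t pixels,
                           const float mean[3], const float scale[3]) {
    assert(planar.size() == pixels * 3);
    for (std::size_t c = 0; c < 3; ++c) {
        for (std::size_t i = 0; i < pixels; ++i) {
            float& v = planar[c * pixels + i];
            v = (v - mean[c]) * scale[c];
        }
    }
}
```

Because the channels are reordered to BGR first, the mean values in the JNI code are listed in B, G, R order as well.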

- In the project package com.example.ncnn1, change the code in MainActivity.java as follows:

package com.example.ncnn1;

import android.Manifest;

import android.app.Activity;

import android.content.Intent;

import android.content.pm.PackageManager;

import android.content.res.AssetManager;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.net.Uri;

import android.os.Bundle;

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import android.support.v4.app.ActivityCompat;

import android.support.v4.content.ContextCompat;

import android.text.method.ScrollingMovementMethod;

import android.util.Log;

import android.view.View;

import android.widget.Button;

import android.widget.ImageView;

import android.widget.TextView;

import android.widget.Toast;

import com.bumptech.glide.Glide;

import com.bumptech.glide.load.engine.DiskCacheStrategy;

import com.bumptech.glide.request.RequestOptions;

import java.io.BufferedReader;

import java.io.FileNotFoundException;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

public class MainActivity extends Activity {

private static final String TAG = MainActivity.class.getName();

private static final int USE_PHOTO = 1001;

private String camera_image_path;

private ImageView show_image;

private TextView result_text;

private boolean load_result = false;

private int[] ddims = {1, 3, 224, 224};

private int model_index = 1;

private List<String> resultLabel = new ArrayList<>();

private NcnnJni squeezencnn = new NcnnJni();

@Override

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

try {

initSqueezeNcnn();

} catch (IOException e) {

Log.e("MainActivity", "initSqueezeNcnn error");

}

init_view();

readCacheLabelFromLocalFile();

}

private void initSqueezeNcnn() throws IOException {

byte[] param = null;

byte[] bin = null;

{

InputStream assetsInputStream = getAssets().open("mobilenet_v2.param.bin");

int available = assetsInputStream.available();

param = new byte[available];

int byteCode = assetsInputStream.read(param);

assetsInputStream.close();

}

{

InputStream assetsInputStream = getAssets().open("mobilenet_v2.bin");

int available = assetsInputStream.available();

bin = new byte[available];

int byteCode = assetsInputStream.read(bin);

assetsInputStream.close();

}

load_result = squeezencnn.Init(param, bin);

Log.d("load model", "result:" + load_result);

}

// initialize view

private void init_view() {

request_permissions();

show_image = (ImageView) findViewById(R.id.show_image);

result_text = (TextView) findViewById(R.id.result_text);

result_text.setMovementMethod(ScrollingMovementMethod.getInstance());

Button use_photo = (Button) findViewById(R.id.use_photo);

// use photo click

use_photo.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

if (!load_result) {

Toast.makeText(MainActivity.this, "never load model", Toast.LENGTH_SHORT).show();

return;

}

PhotoUtil.use_photo(MainActivity.this, USE_PHOTO);

}

});

}

// load label's name

private void readCacheLabelFromLocalFile() {

try {

AssetManager assetManager = getApplicationContext().getAssets();

BufferedReader reader = new BufferedReader(new InputStreamReader(assetManager.open("synset.txt")));

String readLine = null;

while ((readLine = reader.readLine()) != null) {

resultLabel.add(readLine);

}

reader.close();

} catch (Exception e) {

Log.e("labelCache", "error " + e);

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, @Nullable Intent data) {

String image_path;

RequestOptions options = new RequestOptions().skipMemoryCache(true).diskCacheStrategy(DiskCacheStrategy.NONE);

if (resultCode == Activity.RESULT_OK) {

switch (requestCode) {

case USE_PHOTO:

if (data == null) {

Log.w(TAG, "user photo data is null");

return;

}

Uri image_uri = data.getData();

Glide.with(MainActivity.this).load(image_uri).apply(options).into(show_image);

// get image path from uri

image_path = PhotoUtil.get_path_from_URI(MainActivity.this, image_uri);

// predict image

predict_image(image_path);

break;

}

}

}

// predict image

private void predict_image(String image_path) {

// picture to float array

Bitmap bmp = PhotoUtil.getScaleBitmap(image_path);

Bitmap rgba = bmp.copy(Bitmap.Config.ARGB_8888, true);

// resize to the model input size (224x224, taken from ddims)

Bitmap input_bmp = Bitmap.createScaledBitmap(rgba, ddims[2], ddims[3], false);

try {

// Data format conversion takes too long

// Log.d("inputData", Arrays.toString(inputData));

long start = System.currentTimeMillis();

// get predict result

float[] result = squeezencnn.Detect(input_bmp);

long end = System.currentTimeMillis();

Log.d(TAG, "origin predict result:" + Arrays.toString(result));

long time = end - start;

Log.d("result length", String.valueOf(result.length));

// show predict result and time

int r = get_max_result(result);

String show_text = "result:" + r + "\nname:" + resultLabel.get(r) + "\nprobability:" + result[r] + "\ntime:" + time + "ms";

result_text.setText(show_text);

} catch (Exception e) {

e.printStackTrace();

}

}

// get max probability label

private int get_max_result(float[] result) {

float probability = result[0];

int r = 0;

for (int i = 0; i < result.length; i++) {

if (probability < result[i]) {

probability = result[i];

r = i;

}

}

return r;

}

// request permissions

private void request_permissions() {

List<String> permissionList = new ArrayList<>();

if (ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.CAMERA);

}

if (ContextCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.WRITE_EXTERNAL_STORAGE);

}

if (ContextCompat.checkSelfPermission(this, Manifest.permission.READ_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.READ_EXTERNAL_STORAGE);

}

// if list is not empty will request permissions

if (!permissionList.isEmpty()) {

ActivityCompat.requestPermissions(this, permissionList.toArray(new String[permissionList.size()]), 1);

}

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

switch (requestCode) {

case 1:

if (grantResults.length > 0) {

for (int i = 0; i < grantResults.length; i++) {

int grantResult = grantResults[i];

if (grantResult == PackageManager.PERMISSION_DENIED) {

String s = permissions[i];

Toast.makeText(this, s + " permission was denied", Toast.LENGTH_SHORT).show();

}

}

}

break;

}

}

}
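get_max_result returns only the single most probable class. If several candidates are wanted, the same idea generalizes to a top-k selection. The sketch below is written in C++, the language of the JNI layer, and is not part of the project; topK is a hypothetical helper, and the order of tied scores is unspecified.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Return the indices of the k largest scores, highest score first.
// If k exceeds the number of scores, all indices are returned.
std::vector<int> topK(const std::vector<float>& scores, std::size_t k) {
    assert(!scores.empty());
    k = std::min(k, scores.size());
    std::vector<int> idx(scores.size());
    std::iota(idx.begin(), idx.end(), 0);  // 0, 1, 2, ...
    std::partial_sort(idx.begin(),
                      idx.begin() + static_cast<std::ptrdiff_t>(k),
                      idx.end(),
                      [&scores](int a, int b) { return scores[a] > scores[b]; });
    idx.resize(k);
    return idx;
}
```

With k set to 1, the single returned index plays the same role as the value returned by get_max_result.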

- Also in the project package com.example.ncnn1, create a NcnnJni.java class that provides the JNI interface:

package com.example.ncnn1;

import android.graphics.Bitmap;

public class NcnnJni

{

public native boolean Init(byte[] param, byte[] bin);

public native float[] Detect(Bitmap bitmap);

static {

System.loadLibrary("ncnn_jni");

}

}
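The native methods declared here are matched to the C++ functions by name: the symbol is built from the prefix Java_, the fully qualified class name, and the method name. A rough sketch of that naming rule follows. The helpers jniMangle and jniSymbol are hypothetical, and the sketch only covers the common cases (dots or slashes become underscores, a literal underscore becomes _1); it ignores non-ASCII characters and overloaded methods.

```cpp
#include <cassert>
#include <string>

// Escape one part of a JNI symbol name (simplified: handles '.', '/' and '_' only).
std::string jniMangle(const std::string& name) {
    std::string out;
    for (char c : name) {
        if (c == '.' || c == '/') {
            out += '_';      // package separator
        } else if (c == '_') {
            out += "_1";     // a literal underscore is escaped
        } else {
            out += c;
        }
    }
    return out;
}

// Build the native symbol name for a method of a fully qualified Java class.
std::string jniSymbol(const std::string& className, const std::string& method) {
    assert(!className.empty() && !method.empty());
    return "Java_" + jniMangle(className) + "_" + jniMangle(method);
}
```

For com.example.ncnn1.NcnnJni and the method Init, this gives Java_com_example_ncnn1_NcnnJni_Init, which is the function name used in the C++ file. If the package or class is renamed, the C++ function names have to change with it.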

- Still in the project package com.example.ncnn1, create a PhotoUtil.java class, a utility class for handling images:

package com.example.ncnn1;

import android.app.Activity;

import android.content.Context;

import android.content.Intent;

import android.database.Cursor;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.net.Uri;

import android.provider.MediaStore;

import java.nio.FloatBuffer;

public class PhotoUtil {

// get picture in photo

public static void use_photo(Activity activity, int requestCode) {

Intent intent = new Intent(Intent.ACTION_PICK);

intent.setType("image/*");

activity.startActivityForResult(intent, requestCode);

}

// get photo from Uri

public static String get_path_from_URI(Context context, Uri uri) {

String result;

Cursor cursor = context.getContentResolver().query(uri, null, null, null, null);

if (cursor == null) {

result = uri.getPath();

} else {

cursor.moveToFirst();

int idx = cursor.getColumnIndex(MediaStore.Images.ImageColumns.DATA);

result = cursor.getString(idx);

cursor.close();

}

return result;

}

// compress picture

public static Bitmap getScaleBitmap(String filePath) {

BitmapFactory.Options opt = new BitmapFactory.Options();

opt.inJustDecodeBounds = true;

BitmapFactory.decodeFile(filePath, opt);

int bmpWidth = opt.outWidth;

int bmpHeight = opt.outHeight;

int maxSize = 500;

// compress picture with inSampleSize

opt.inSampleSize = 1;

while (true) {

if (bmpWidth / opt.inSampleSize < maxSize || bmpHeight / opt.inSampleSize < maxSize) {

break;

}

opt.inSampleSize *= 2;

}

opt.inJustDecodeBounds = false;

return BitmapFactory.decodeFile(filePath, opt);

}

}
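The downsampling loop in getScaleBitmap can be isolated as a small function, sketched here in C++ to match the native code in this article. computeInSampleSize is a hypothetical name; the logic mirrors the Java loop, doubling the sample size until either side, after division, falls below maxSize.

```cpp
#include <cassert>

// Double the sample size while both scaled dimensions are still at least maxSize.
// Equivalent to the Java loop: stop as soon as either scaled side is below maxSize.
int computeInSampleSize(int width, int height, int maxSize) {
    assert(maxSize > 0);
    int sample = 1;
    while (width / sample >= maxSize && height / sample >= maxSize) {
        sample *= 2;
    }
    return sample;
}
```

For a 4000x3000 photo and maxSize 500 the result is 8, so the decoded bitmap is about 500x375. Note that a 1000x1000 image yields 4 rather than 2, because the loop keeps going until a side is strictly below maxSize.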

- Change the layout of the launch page as follows:

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:id="@+id/btn_ll"

android:layout_alignParentBottom="true"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="horizontal">

<Button

android:id="@+id/use_photo"

android:layout_weight="1"

android:layout_width="0dp"

android:layout_height="wrap_content"

android:text="Album" />

</LinearLayout>

<TextView

android:layout_above="@id/btn_ll"

android:id="@+id/result_text"

android:textSize="16sp"

android:layout_width="match_parent"

android:hint="The prediction result will be shown here"

android:layout_height="100dp" />

<ImageView

android:layout_alignParentTop="true"

android:layout_above="@id/result_text"

android:id="@+id/show_image"

android:layout_width="match_parent"

android:layout_height="match_parent" />

</RelativeLayout>

- Change the CMakeLists.txt file in the app directory as follows:

# For more information about using CMake with Android Studio, read the

# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.

cmake_minimum_required(VERSION 3.4.1)

# Creates and names a library, sets it as either STATIC

# or SHARED, and provides the relative paths to its source code.

# You can define multiple libraries, and CMake builds them for you.

# Gradle automatically packages shared libraries with your APK.

set(ncnn_lib ${CMAKE_SOURCE_DIR}/src/main/jniLibs/armeabi-v7a/libncnn.a)

add_library (ncnn_lib STATIC IMPORTED)

set_target_properties(ncnn_lib PROPERTIES IMPORTED_LOCATION ${ncnn_lib})

add_library( # Sets the name of the library.

ncnn_jni

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

src/main/cpp/ncnn_jni.cpp )

# Searches for a specified prebuilt library and stores the path as a

# variable. Because CMake includes system libraries in the search path by

# default, you only need to specify the name of the public NDK library

# you want to add. CMake verifies that the library exists before

# completing its build.

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log )

# Specifies libraries CMake should link to your target library. You

# can link multiple libraries, such as libraries you define in this

# build script, prebuilt third-party libraries, or system libraries.

target_link_libraries( # Specifies the target library.

ncnn_jni

ncnn_lib

jnigraphics

# Links the target library to the log library

# included in the NDK.

${log-lib} )

- Change the build.gradle file in the app directory as follows:

apply plugin: 'com.android.application'

android {

compileSdkVersion 28

defaultConfig {

applicationId "com.example.ncnn1"

minSdkVersion 21

targetSdkVersion 28

versionCode 1

versionName "1.0"

testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"

externalNativeBuild {

cmake {

cppFlags "-std=c++11 -fopenmp"

abiFilters "armeabi-v7a"

}

}

}

buildTypes {

release {

minifyEnabled false

proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'

}

}

externalNativeBuild {

cmake {

path "CMakeLists.txt"

}

}

sourceSets {

main {

jniLibs.srcDirs = ["src/main/jniLibs"]

jni.srcDirs = ['src/cpp']

}

}

}

dependencies {

implementation fileTree(dir: 'libs', include: ['*.jar'])

implementation 'com.android.support:appcompat-v7:28.0.0-rc02'

implementation 'com.android.support.constraint:constraint-layout:1.1.3'

testImplementation 'junit:junit:4.12'

implementation 'com.github.bumptech.glide:glide:4.3.1'

androidTestImplementation 'com.android.support.test:runner:1.0.2'

androidTestImplementation 'com.android.support.test.espresso:espresso-core:3.0.2'

}

- Finally, don't forget to add the permissions to the manifest file.

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

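The final result is shown below:

Source code: almost all of the code is already included above. For readers who want to use it directly, the project source code can be downloaded here.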

References

- https://github.com/BVLC/caffe

- https://github.com/Tencent/ncnn/wiki/how-to-use-ncnn-with-alexnet

- https://github.com/Tencent/ncnn/wiki/how-to-build

- https://github.com/Tencent/ncnn/tree/master/examples/squeezencnn