Preface

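I previously wrote "Image Classification on Android Devices with PaddleMobile", which used PaddleMobile, Baidu's open-source framework. In this chapter I will show how to use MACE, Xiaomi's open-source deep learning framework for mobile devices, to implement image classification on an Android phone.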

MACE on GitHub: https://github.com/XiaoMi/mace

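Building the MACE Library and Model

There are two ways to build the MACE library and model: building locally on Ubuntu, or building with Docker. Both are described below.

Building on Ubuntu

The tedious part of building from source on Ubuntu is that you have to set up the environment yourself, so we start with that. The official documentation lists the following dependencies:

Required dependencies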

| Software | Installation command | Tested version |
|:---:|:---:|:---:|
| Python | | 2.7 |
| Bazel | bazel installation guide | 0.13.0 |
| CMake | apt-get install cmake | >= 3.11.3 |
| Jinja2 | pip install -I jinja2==2.10 | 2.10 |
| PyYaml | pip install -I pyyaml==3.12 | 3.12.0 |
| sh | pip install -I sh==1.12.14 | 1.12.14 |
| Numpy | pip install -I numpy==1.14.0 | Required by model validation |
| six | pip install -I six==1.11.0 | Required for Python 2 and 3 compatibility (TODO) |

Optional dependencies

| Software | Installation command | Remark |
|:---:|:---:|:---:|
| Android NDK | NDK installation guide | Required by Android build, r15b, r15c, r16b, r17b |
| ADB | apt-get install android-tools-adb | Required by Android run, >= 1.0.32 |
| TensorFlow | pip install -I tensorflow==1.6.0 | Required by TensorFlow model |
| Docker | docker installation guide | Required by docker mode for Caffe model |
| Scipy | pip install -I scipy==1.0.0 | Required by model validation |
| FileLock | pip install -I filelock==3.0.0 | Required by run on Android |

Installing the Dependencies

- Install Bazel

export BAZEL_VERSION=0.13.1
mkdir /bazel && \
    cd /bazel && \
    wget https://github.com/bazelbuild/bazel/releases/download/$BAZEL_VERSION/bazel-$BAZEL_VERSION-installer-linux-x86_64.sh && \
    chmod +x bazel-*.sh && \
    ./bazel-$BAZEL_VERSION-installer-linux-x86_64.sh && \
    cd / && \
    rm -f /bazel/bazel-$BAZEL_VERSION-installer-linux-x86_64.sh

- Install the Android NDK

# Download NDK r15c
cd /opt/ && \
    wget -q https://dl.google.com/android/repository/android-ndk-r15c-linux-x86_64.zip && \
    unzip -q android-ndk-r15c-linux-x86_64.zip && \
    rm -f android-ndk-r15c-linux-x86_64.zip

export ANDROID_NDK_VERSION=r15c
export ANDROID_NDK=/opt/android-ndk-${ANDROID_NDK_VERSION}
export ANDROID_NDK_HOME=${ANDROID_NDK}

# add to PATH
export PATH=${PATH}:${ANDROID_NDK_HOME}

- Install other tools

apt-get install -y --no-install-recommends \
    cmake \
    android-tools-adb

pip install -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com setuptools

pip install -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com \
    "numpy>=1.14.0" \
    scipy \
    jinja2 \
    pyyaml \
    sh==1.12.14 \
    pycodestyle==2.4.0 \
    filelock

- Install TensorFlow

pip install -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com tensorflow==1.6.0

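Building the Library and Model

- Clone the MACE source

git clone https://github.com/XiaoMi/mace.git

- Enter the official Android demo directory

cd mace/mace/examples/android/

- Modify build.sh in the current directory so that it reads as follows: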

#!/usr/bin/env bash

set -e -u -o pipefail

pushd ../../../

TARGET_ABI=armeabi-v7a

LIBRARY_DIR=mace/examples/android/macelibrary/src/main/cpp/

INCLUDE_DIR=$LIBRARY_DIR/include/mace/public/

LIBMACE_DIR=$LIBRARY_DIR/lib/$TARGET_ABI/

rm -rf $LIBRARY_DIR/include/

mkdir -p $INCLUDE_DIR

rm -rf $LIBRARY_DIR/lib/

mkdir -p $LIBMACE_DIR

rm -rf $LIBRARY_DIR/model/

python tools/converter.py convert --config=mace/examples/android/mobilenet.yml --target_abis=$TARGET_ABI

cp -rf builds/mobilenet/include/mace/public/*.h $INCLUDE_DIR

cp -rf builds/mobilenet/model $LIBRARY_DIR

bazel build --config android --config optimization mace/libmace:libmace_static --define neon=true --define openmp=true --define opencl=true --cpu=$TARGET_ABI

cp -rf mace/public/*.h $INCLUDE_DIR

cp -rf bazel-genfiles/mace/libmace/libmace.a $LIBMACE_DIR

popd

- Modify the model configuration file mobilenet.yml as follows. The meaning of each property is described in the official documentation, and the configuration for each model can be found in the corresponding entry of the Mobile Model Zoo. The example below uses MobileNet V2.

library_name: mobilenet
target_abis: [armeabi-v7a]
model_graph_format: code
model_data_format: code
models:
  mobilenet_v2:
    platform: tensorflow
    model_file_path: https://cnbj1.fds.api.xiaomi.com/mace/miai-models/mobilenet-v2/mobilenet-v2-1.0.pb
    model_sha256_checksum: 369f9a5f38f3c15b4311c1c84c032ce868da9f371b5f78c13d3ea3c537389bb4
    subgraphs:
      - input_tensors:
          - input
        input_shapes:
          - 1,224,224,3
        output_tensors:
          - MobilenetV2/Predictions/Reshape_1
        output_shapes:
          - 1,1001
    runtime: cpu+gpu
    limit_opencl_kernel_time: 0
    nnlib_graph_mode: 0
    obfuscate: 0
    winograd: 0
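If you swap in your own model file, the model_sha256_checksum value has to be updated to match it. The following is a minimal sketch of computing and comparing a SHA-256 digest in plain Java. The class name Sha256Check and its methods are hypothetical helpers for illustration and are not part of MACE.

```java
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper (not part of MACE): computes the SHA-256 digest of a
// model file so it can be compared with model_sha256_checksum in the yml.
public final class Sha256Check {
    private Sha256Check() {}

    // Returns the lowercase hexadecimal SHA-256 digest of the given bytes.
    public static String sha256Hex(byte[] data) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(data);
            StringBuilder hex = new StringBuilder(hash.length * 2);
            for (byte b : hash) {
                hex.append(String.format("%02x", b & 0xFF));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is a mandatory algorithm on standard Java platforms.
            throw new IllegalStateException(e);
        }
    }

    // True when the digest of data equals the expected hex string.
    public static boolean matches(byte[] data, String expectedHex) {
        return sha256Hex(data).equalsIgnoreCase(expectedHex.trim());
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 2) {
            // Usage: java Sha256Check <model file> <expected checksum>
            byte[] data = Files.readAllBytes(Paths.get(args[0]));
            System.out.println(matches(data, args[1]) ? "checksum OK" : "checksum MISMATCH");
        } else {
            // Without arguments, print the digest of a short test string.
            System.out.println(sha256Hex("abc".getBytes(StandardCharsets.UTF_8)));
        }
    }
}
```

Run it against the downloaded .pb file and the checksum from mobilenet.yml; a mismatch usually means the file is incomplete or the yml still holds the checksum of a different model.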

- Start the build

./build.sh

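- When the build finishes, three new directories appear under mace/mace/examples/android/macelibrary/src/main/cpp/:

1. include holds the header files for the MACE API and the model configuration

2. lib holds the compiled MACE library

3. model holds the compiled models, such as the MobileNet V2 model we just built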

Building with Docker

- First install Docker:

apt-get install docker.io

- Pull the MACE image:

docker pull registry.cn-hangzhou.aliyuncs.com/xiaomimace/mace-dev

- Get the MACE source, then modify build.sh and mobilenet.yml under mace/mace/examples/android/ as described in the previous section.

git clone https://github.com/XiaoMi/mace.git

- From the MACE root directory, run:

docker run -it -v $PWD:/mace registry.cn-hangzhou.aliyuncs.com/xiaomimace/mace-dev

- Then run:

cd mace/mace/examples/android/

./build.sh

This produces the same files as the Ubuntu build. Docker is much simpler because it skips most of the dependency setup.

Developing the Android Project

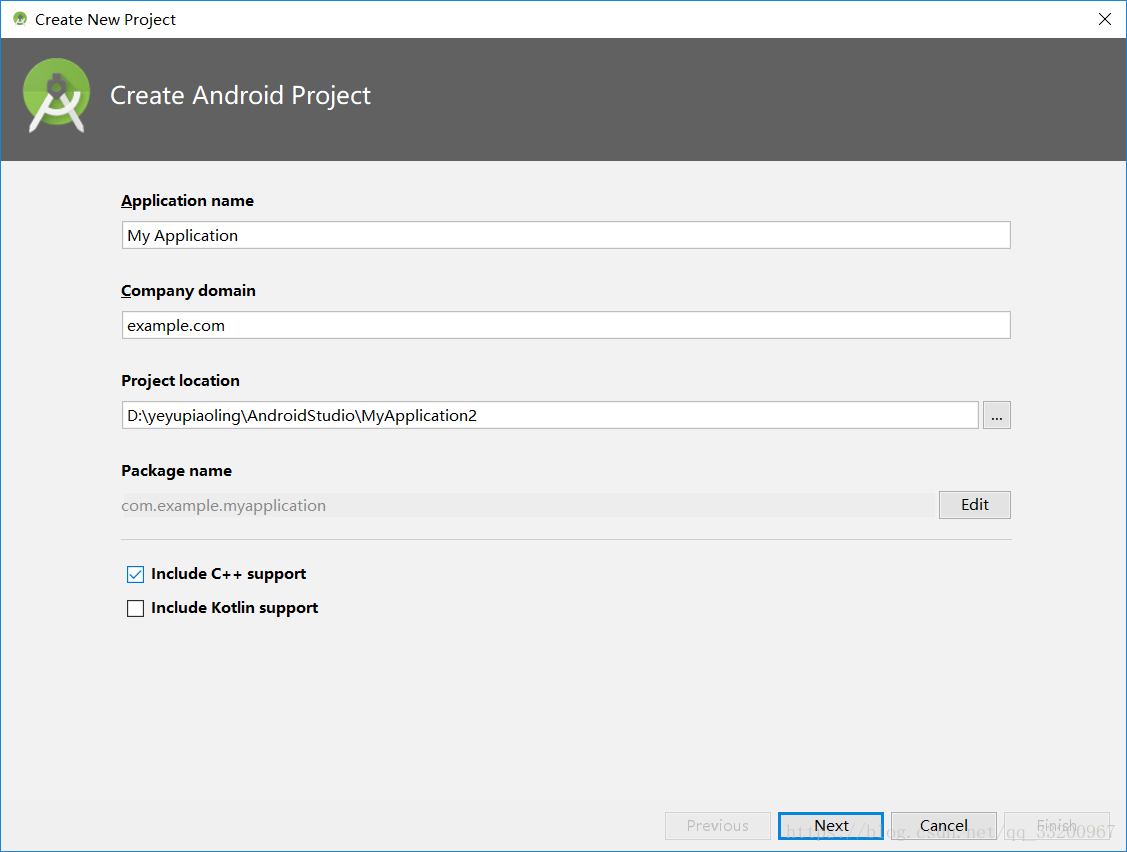

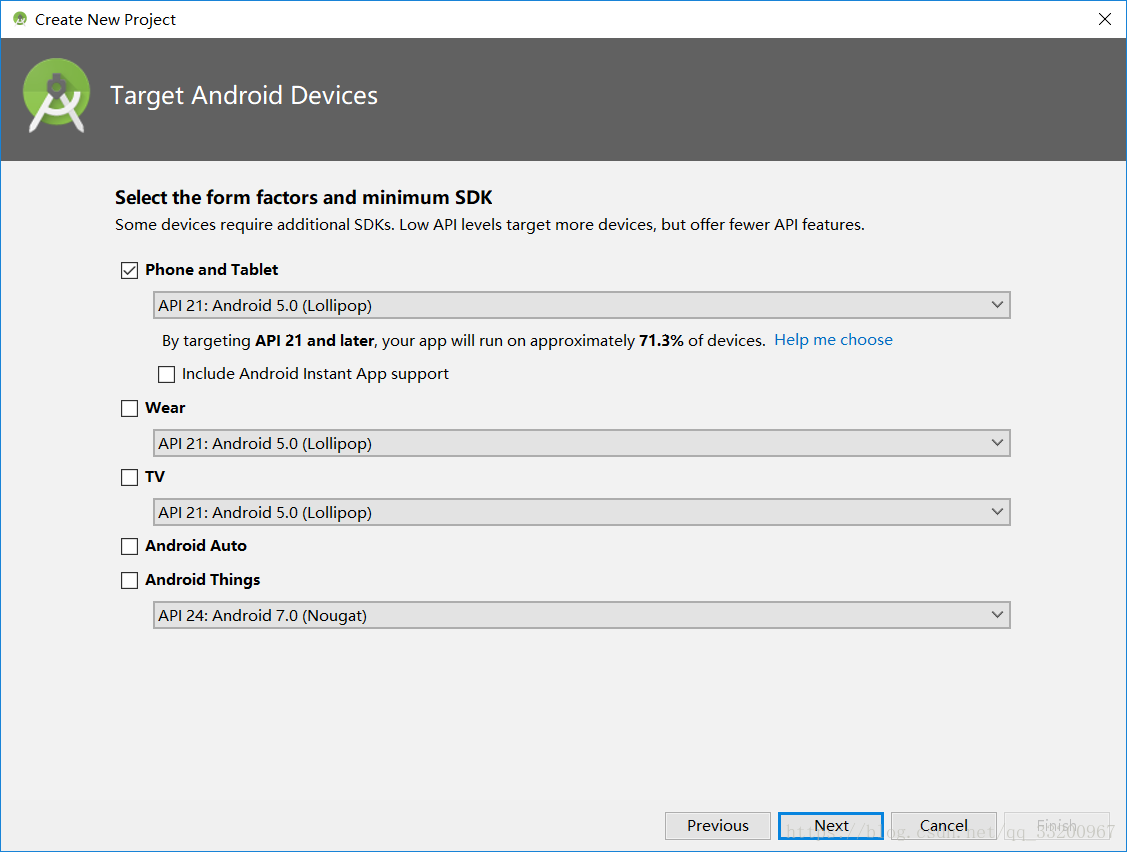

- Create the Android project

Select C++ support when creating the project.

MACE supports Android 5.0 at minimum, so choose Android 5.0 here.

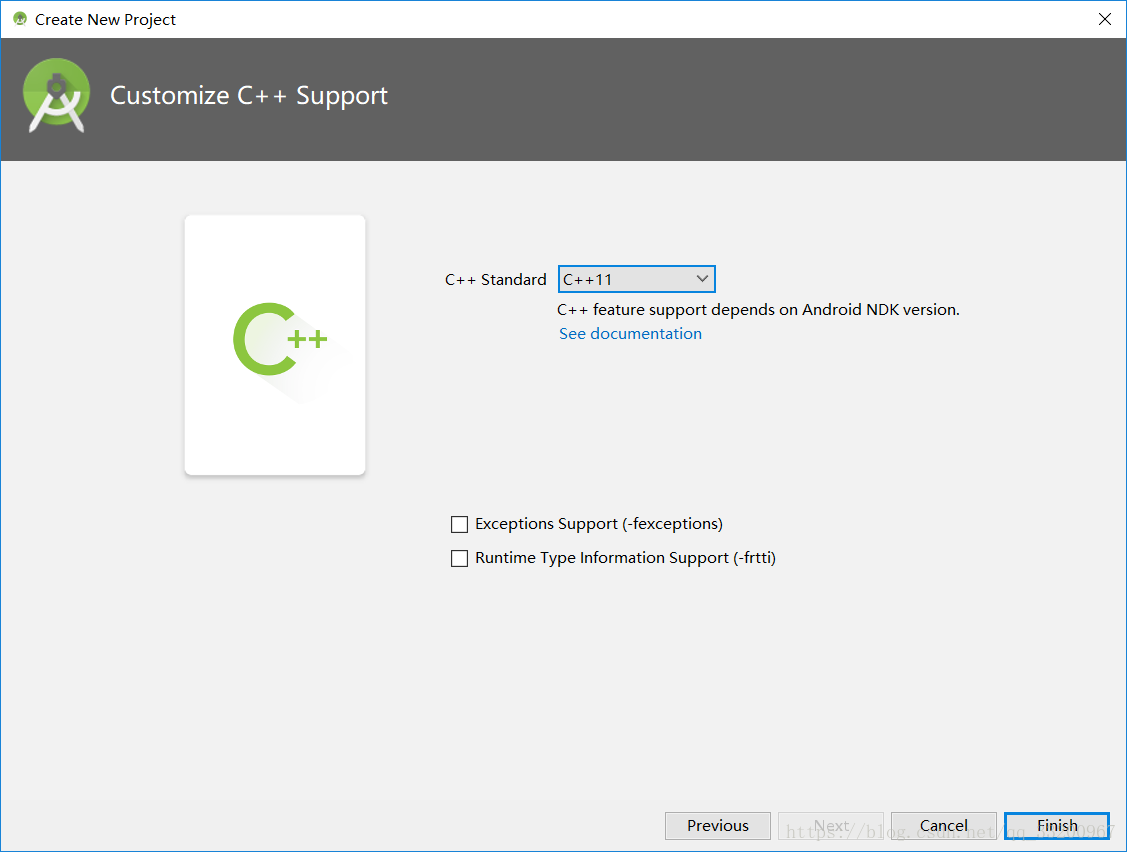

MACE uses C++11, so select C++11 as the C++ standard.

-

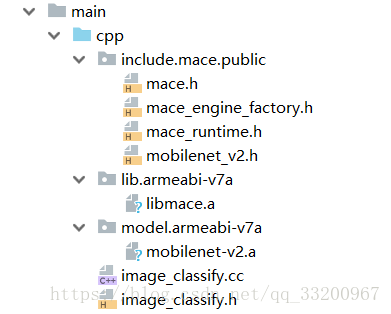

複製C++文件。刪除

cpp目錄下自動生產的C++文件,並複製上一步編譯得到的3個目錄和本來就有的兩C++文件到Android項目的cpp目錄下。如下圖:

-

修改

CMakeLists.txt編譯文件,修改如下,編譯對應的是我們上一步複製的C++文件:

# For more information about using CMake with Android Studio, read the

# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.

cmake_minimum_required(VERSION 3.4.1)

# Creates and names a library, sets it as either STATIC

# or SHARED, and provides the relative paths to its source code.

# You can define multiple libraries, and CMake builds them for you.

# Gradle automatically packages shared libraries with your APK.

#set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/../app/libs/${ANDROID_ABI})

include_directories(${CMAKE_SOURCE_DIR}/)

include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/include)

set(mace_lib ${CMAKE_SOURCE_DIR}/src/main/cpp/lib/armeabi-v7a/libmace.a)

set(mobilenet_lib ${CMAKE_SOURCE_DIR}/src/main/cpp/model/armeabi-v7a/mobilenet.a)

add_library (mace_lib STATIC IMPORTED)

set_target_properties(mace_lib PROPERTIES IMPORTED_LOCATION ${mace_lib})

add_library (mobilenet_lib STATIC IMPORTED)

set_target_properties(mobilenet_lib PROPERTIES IMPORTED_LOCATION ${mobilenet_lib})

add_library( # Sets the name of the library.

mace_mobile_jni

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

src/main/cpp/image_classify.cc )

# Searches for a specified prebuilt library and stores the path as a

# variable. Because CMake includes system libraries in the search path by

# default, you only need to specify the name of the public NDK library

# you want to add. CMake verifies that the library exists before

# completing its build.

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log )

# Specifies libraries CMake should link to your target library. You

# can link multiple libraries, such as libraries you define in this

# build script, prebuilt third-party libraries, or system libraries.

target_link_libraries( # Specifies the target library.

mace_mobile_jni

mace_lib

mobilenet_lib

# Links the target library to the log library

# included in the NDK.

${log-lib} )

- Modify build.gradle in the app directory as follows.

Change the original

externalNativeBuild {

cmake {

cppFlags "-std=c++11"

}

}

to the following, because we only built for armeabi-v7a:

externalNativeBuild {

cmake {

cppFlags "-std=c++11 -fopenmp"

abiFilters "armeabi-v7a"

}

}

Add the following inside the android block:

sourceSets {

main {

jniLibs.srcDirs = ["src/main/jniLibs"]

jni.srcDirs = ['src/cpp']

}

}

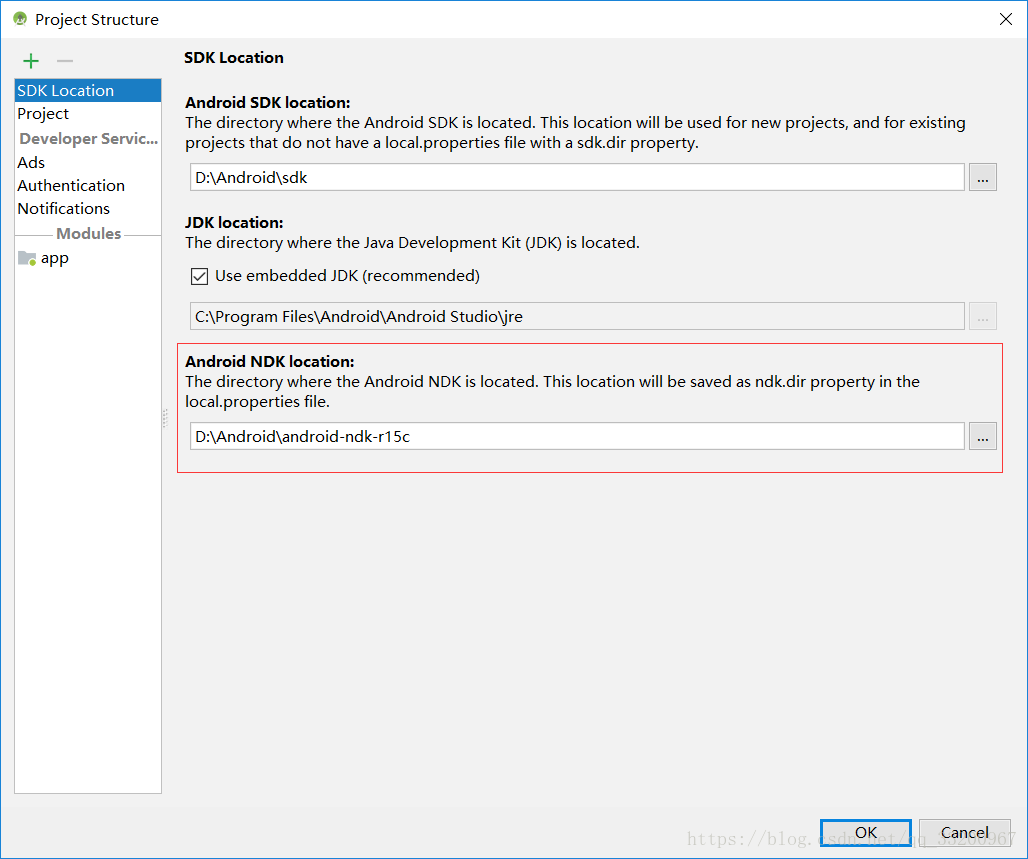

-

修改Android項目使用的NDK版本,我們編譯的時候是使用r15c,所以我們在Android項目上也要使用r15c,如下:

-

創建一個

com.xiaomi.mace包,並複製官方demo中的java類JniMaceUtils.java到該包中,代碼如下,這個就是使用mace的JNI接口:

package com.xiaomi.mace;

public class JniMaceUtils {

static {

System.loadLibrary("mace_mobile_jni");

}

// Set the model attributes

public static native int maceMobilenetSetAttrs(int ompNumThreads, int cpuAffinityPolicy, int gpuPerfHint, int gpuPriorityHint, String kernelPath);

// Load the model and choose whether to run on the GPU or the CPU

public static native int maceMobilenetCreateEngine(String model, String device);

// Classify an image

public static native float[] maceMobilenetClassify(float[] input);

}
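The float array passed to maceMobilenetClassify has to contain exactly as many values as the input shape declared in mobilenet.yml (1,224,224,3). As a rough sketch, a length check before the JNI call could look like the following. The class InputShape and its methods are hypothetical helpers for illustration, not part of the MACE demo.

```java
// Hypothetical helper (not part of the MACE demo): checks that an input
// array matches a shape string written the way mobilenet.yml writes it.
public final class InputShape {
    private InputShape() {}

    // Multiplies the comma-separated dimensions, e.g. "1,224,224,3".
    public static int elementCount(String shape) {
        int count = 1;
        for (String dim : shape.split(",")) {
            // multiplyExact throws ArithmeticException on int overflow.
            count = Math.multiplyExact(count, Integer.parseInt(dim.trim()));
        }
        return count;
    }

    // Throws if the array length does not match the shape.
    public static void requireLength(float[] input, String shape) {
        int expected = elementCount(shape);
        if (input.length != expected) {
            throw new IllegalArgumentException(
                    "expected " + expected + " floats for shape " + shape
                            + " but got " + input.length);
        }
    }

    public static void main(String[] args) {
        System.out.println(elementCount("1,224,224,3"));
        System.out.println(elementCount("1,1001"));
    }
}
```

Calling requireLength(inputData, "1,224,224,3") before the native call turns a mismatched buffer into a readable Java exception rather than a failure inside native code.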

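- In the project's package, create an InitData.java class. It holds the MACE configuration, such as whether to run prediction on the CPU or the GPU and which model to load: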

package com.example.myapplication;

import android.os.Environment;

import java.io.File;

public class InitData {

public static final String[] DEVICES = new String[]{"CPU", "GPU"};

public static final String[] MODELS = new String[]{"mobilenet_v1", "mobilenet_v2"};

private String model;

private String device = "";

private int ompNumThreads;

private int cpuAffinityPolicy;

private int gpuPerfHint;

private int gpuPriorityHint;

private String kernelPath = "";

public InitData() {

model = MODELS[1];

ompNumThreads = 4;

cpuAffinityPolicy = 0;

gpuPerfHint = 3;

gpuPriorityHint = 3;

device = DEVICES[0];

kernelPath = Environment.getExternalStorageDirectory().getAbsolutePath() + File.separator + "mace";

File file = new File(kernelPath);

if (!file.exists()) {

file.mkdir();

}

}

public String getModel() {

return model;

}

public void setModel(String model) {

this.model = model;

}

public String getDevice() {

return device;

}

public void setDevice(String device) {

this.device = device;

}

public int getOmpNumThreads() {

return ompNumThreads;

}

public void setOmpNumThreads(int ompNumThreads) {

this.ompNumThreads = ompNumThreads;

}

public int getCpuAffinityPolicy() {

return cpuAffinityPolicy;

}

public void setCpuAffinityPolicy(int cpuAffinityPolicy) {

this.cpuAffinityPolicy = cpuAffinityPolicy;

}

public int getGpuPerfHint() {

return gpuPerfHint;

}

public void setGpuPerfHint(int gpuPerfHint) {

this.gpuPerfHint = gpuPerfHint;

}

public int getGpuPriorityHint() {

return gpuPriorityHint;

}

public void setGpuPriorityHint(int gpuPriorityHint) {

this.gpuPriorityHint = gpuPriorityHint;

}

public String getKernelPath() {

return kernelPath;

}

public void setKernelPath(String kernelPath) {

this.kernelPath = kernelPath;

}

}

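- Also in the project's package, create a PhotoUtil.java class. This utility class launches the camera to take a photo, resolves the absolute path of an image, and converts an image into the input data for prediction. MACE takes its input as a float array.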

package com.example.myapplication;

import android.app.Activity;

import android.content.Context;

import android.content.Intent;

import android.database.Cursor;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.net.Uri;

import android.os.Build;

import android.provider.MediaStore;

import android.support.v4.content.FileProvider;

import java.io.File;

import java.io.IOException;

import java.nio.FloatBuffer;

public class PhotoUtil {

// start camera

public static Uri start_camera(Activity activity, int requestCode) {

Uri imageUri;

// save image in cache path

File outputImage = new File(activity.getExternalCacheDir(), "out_image.jpg");

try {

if (outputImage.exists()) {

outputImage.delete();

}

outputImage.createNewFile();

} catch (IOException e) {

e.printStackTrace();

}

if (Build.VERSION.SDK_INT >= 24) {

// compatible with Android 7.0 or over

imageUri = FileProvider.getUriForFile(activity,

"com.example.myapplication", outputImage);

} else {

imageUri = Uri.fromFile(outputImage);

}

// set system camera Action

Intent intent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);

// set save photo path

intent.putExtra(MediaStore.EXTRA_OUTPUT, imageUri);

// set photo quality, min is 0, max is 1

intent.putExtra(MediaStore.EXTRA_VIDEO_QUALITY, 0);

activity.startActivityForResult(intent, requestCode);

return imageUri;

}

// get picture in photo

public static void use_photo(Activity activity, int requestCode){

Intent intent = new Intent(Intent.ACTION_PICK);

intent.setType("image/*");

activity.startActivityForResult(intent, requestCode);

}

// get photo from Uri

public static String get_path_from_URI(Context context, Uri uri) {

String result;

Cursor cursor = context.getContentResolver().query(uri, null, null, null, null);

if (cursor == null) {

result = uri.getPath();

} else {

cursor.moveToFirst();

int idx = cursor.getColumnIndex(MediaStore.Images.ImageColumns.DATA);

result = cursor.getString(idx);

cursor.close();

}

return result;

}

// Compress the image to the size of the training image

public static float[] getScaledMatrix(Bitmap bitmap, int desWidth,

int desHeight) {

// create data buffer

float[] floatValues = new float[desWidth * desHeight * 3];

FloatBuffer floatBuffer = FloatBuffer.wrap(floatValues, 0, desWidth * desHeight * 3);

floatBuffer.rewind();

// get image pixel

int[] pixels = new int[desWidth * desHeight];

Bitmap bm = Bitmap.createScaledBitmap(bitmap, desWidth, desHeight, false);

bm.getPixels(pixels, 0, bm .getWidth(), 0, 0, desWidth, desHeight);

// pixel to data

for (int clr : pixels) {

floatBuffer.put((((clr >> 16) & 0xFF) - 128f) / 128f);

floatBuffer.put((((clr >> 8) & 0xFF) - 128f) / 128f);

floatBuffer.put(((clr & 0xFF) - 128f) / 128f);

}

if (!bm.isRecycled()) {

bm.recycle();

}

return floatBuffer.array();

}

// compress picture

public static Bitmap getScaleBitmap(String filePath) {

BitmapFactory.Options opt = new BitmapFactory.Options();

opt.inJustDecodeBounds = true;

BitmapFactory.decodeFile(filePath, opt);

int bmpWidth = opt.outWidth;

int bmpHeight = opt.outHeight;

int maxSize = 500;

// compress picture with inSampleSize

opt.inSampleSize = 1;

while (true) {

if (bmpWidth / opt.inSampleSize < maxSize || bmpHeight / opt.inSampleSize < maxSize) {

break;

}

opt.inSampleSize *= 2;

}

opt.inJustDecodeBounds = false;

return BitmapFactory.decodeFile(filePath, opt);

}

}
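The pixel conversion in getScaledMatrix can be tried without an Android device. The sketch below repeats the same arithmetic in plain Java: each packed ARGB pixel becomes three floats (R, G, B), each mapped from 0..255 to roughly -1..1 by (c - 128) / 128. The class name PixelNormalizer is a hypothetical stand-in, and the int array stands in for what Bitmap.getPixels would fill.

```java
// Hypothetical stand-alone version of the per-pixel conversion used in
// PhotoUtil.getScaledMatrix; it takes plain ARGB ints instead of a Bitmap.
public final class PixelNormalizer {
    private PixelNormalizer() {}

    // Converts packed ARGB pixels into interleaved R,G,B floats.
    // Each channel c in 0..255 is mapped to (c - 128) / 128.
    public static float[] toNormalizedRgb(int[] argbPixels) {
        float[] out = new float[argbPixels.length * 3];
        int i = 0;
        for (int p : argbPixels) {
            out[i++] = (((p >> 16) & 0xFF) - 128f) / 128f; // red
            out[i++] = (((p >> 8) & 0xFF) - 128f) / 128f;  // green
            out[i++] = ((p & 0xFF) - 128f) / 128f;         // blue
        }
        return out;
    }

    public static void main(String[] args) {
        // One opaque pixel with R=0, G=128, B=255.
        float[] values = toNormalizedRgb(new int[]{0xFF0080FF});
        System.out.println(values[0] + " " + values[1] + " " + values[2]);
    }
}
```

Because the three channels of a pixel are written next to each other, the output is laid out as height x width x channel, which is consistent with the 1,224,224,3 input shape in mobilenet.yml.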

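- Modify MainActivity.java as follows. It has two main functions: opening the gallery to choose an image for prediction, and launching the camera to take a photo for prediction. When the app starts, init_model() is called to set the MACE configuration and load the model; calling load_model(String model) switches to a different model. predict_image(String image_path) runs the prediction on an image and displays the result: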

package com.example.myapplication;

import android.Manifest;

import android.app.Activity;

import android.content.Intent;

import android.content.pm.PackageManager;

import android.content.res.AssetManager;

import android.graphics.Bitmap;

import android.net.Uri;

import android.os.Bundle;

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import android.support.v4.app.ActivityCompat;

import android.support.v4.content.ContextCompat;

import android.support.v7.app.AppCompatActivity;

import android.text.method.ScrollingMovementMethod;

import android.util.Log;

import android.view.View;

import android.widget.Button;

import android.widget.ImageView;

import android.widget.TextView;

import android.widget.Toast;

import com.bumptech.glide.Glide;

import com.bumptech.glide.load.engine.DiskCacheStrategy;

import com.bumptech.glide.request.RequestOptions;

import com.xiaomi.mace.JniMaceUtils;

import java.io.BufferedReader;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

public class MainActivity extends AppCompatActivity {

private static final String TAG = MainActivity.class.getName();

private static final int USE_PHOTO = 1001;

private static final int START_CAMERA = 1002;

private Uri camera_image_path;

private ImageView show_image;

private TextView result_text;

private boolean load_result = false;

private int[] ddims = {1, 3, 224, 224};

private int model_index = 1;

private InitData initData = new InitData();

private List<String> resultLabel = new ArrayList<>();

private static final String[] PADDLE_MODEL = {

"mobilenet_v1",

"mobilenet_v2"

};

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

init_view();

init_model();

readCacheLabelFromLocalFile();

}

// initialize view

private void init_view() {

request_permissions();

show_image = (ImageView) findViewById(R.id.show_image);

result_text = (TextView) findViewById(R.id.result_text);

result_text.setMovementMethod(ScrollingMovementMethod.getInstance());

Button use_photo = (Button) findViewById(R.id.use_photo);

Button start_photo = (Button) findViewById(R.id.start_camera);

// use photo click

use_photo.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

if (!load_result) {

Toast.makeText(MainActivity.this, "never load model", Toast.LENGTH_SHORT).show();

return;

}

PhotoUtil.use_photo(MainActivity.this, USE_PHOTO);

}

});

// start camera click

start_photo.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

if (!load_result) {

Toast.makeText(MainActivity.this, "never load model", Toast.LENGTH_SHORT).show();

return;

}

camera_image_path = PhotoUtil.start_camera(MainActivity.this, START_CAMERA);

}

});

}

// init mace environment

private void init_model() {

int result = JniMaceUtils.maceMobilenetSetAttrs(

initData.getOmpNumThreads(), initData.getCpuAffinityPolicy(),

initData.getGpuPerfHint(), initData.getGpuPriorityHint(),

initData.getKernelPath());

Log.i(TAG, "maceMobilenetSetAttrs result = " + result);

load_model(PADDLE_MODEL[model_index]);

}

// load infer model

private void load_model(String model) {

// set will load model name

initData.setModel(model);

// load model

int result = JniMaceUtils.maceMobilenetCreateEngine(initData.getModel(), initData.getDevice());

Log.i(TAG, "maceMobilenetCreateEngine result = " + result);

// set load model result

load_result = result == 0;

if (load_result) {

Toast.makeText(MainActivity.this, model + " model load success", Toast.LENGTH_SHORT).show();

Log.d(TAG, model + " model load success");

} else {

Toast.makeText(MainActivity.this, model + " model load fail", Toast.LENGTH_SHORT).show();

Log.d(TAG, model + " model load fail");

}

}

private void readCacheLabelFromLocalFile() {

try {

AssetManager assetManager = getApplicationContext().getAssets();

BufferedReader reader = new BufferedReader(new InputStreamReader(assetManager.open("cacheLabel.txt")));

String readLine = null;

while ((readLine = reader.readLine()) != null) {

resultLabel.add(readLine);

}

reader.close();

} catch (Exception e) {

Log.e("labelCache", "error " + e);

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, @Nullable Intent data) {

String image_path;

RequestOptions options = new RequestOptions().skipMemoryCache(true).diskCacheStrategy(DiskCacheStrategy.NONE);

if (resultCode == Activity.RESULT_OK) {

switch (requestCode) {

case USE_PHOTO:

if (data == null) {

Log.w(TAG, "user photo data is null");

return;

}

Uri image_uri = data.getData();

Glide.with(MainActivity.this).load(image_uri).apply(options).into(show_image);

// get image path from uri

image_path = PhotoUtil.get_path_from_URI(MainActivity.this, image_uri);

// predict image

predict_image(image_path);

break;

case START_CAMERA:

// show photo

Glide.with(MainActivity.this).load(camera_image_path).apply(options).into(show_image);

image_path = PhotoUtil.get_path_from_URI(MainActivity.this, camera_image_path);

// predict image

predict_image(image_path);

break;

}

}

}

// predict image

private void predict_image(String image_path) {

// picture to float array

Bitmap bmp = PhotoUtil.getScaleBitmap(image_path);

float[] inputData = PhotoUtil.getScaledMatrix(bmp, ddims[2], ddims[3]);

try {

// Data format conversion takes too long

// Log.d("inputData", Arrays.toString(inputData));

long start = System.currentTimeMillis();

// get predict result

float[] result = JniMaceUtils.maceMobilenetClassify(inputData);

long end = System.currentTimeMillis();

Log.d(TAG, "origin predict result:" + Arrays.toString(result));

long time = end - start;

Log.d("result length", String.valueOf(result.length));

// show predict result and time

int r = get_max_result(result);

String show_text = "result:" + r + "\nname:" + resultLabel.get(r) + "\nprobability:" + result[r] + "\ntime:" + time + "ms";

result_text.setText(show_text);

} catch (Exception e) {

e.printStackTrace();

}

}

// get max probability label

private int get_max_result(float[] result) {

float probability = result[0];

int r = 0;

for (int i = 0; i < result.length; i++) {

if (probability < result[i]) {

probability = result[i];

r = i;

}

}

return r;

}

// request permissions

private void request_permissions() {

List<String> permissionList = new ArrayList<>();

if (ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.CAMERA);

}

if (ContextCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.WRITE_EXTERNAL_STORAGE);

}

if (ContextCompat.checkSelfPermission(this, Manifest.permission.READ_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED) {

permissionList.add(Manifest.permission.READ_EXTERNAL_STORAGE);

}

// if list is not empty will request permissions

if (!permissionList.isEmpty()) {

ActivityCompat.requestPermissions(this, permissionList.toArray(new String[permissionList.size()]), 1);

}

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

switch (requestCode) {

case 1:

if (grantResults.length > 0) {

for (int i = 0; i < grantResults.length; i++) {

int grantResult = grantResults[i];

if (grantResult == PackageManager.PERMISSION_DENIED) {

String s = permissions[i];

Toast.makeText(this, s + " permission was denied", Toast.LENGTH_SHORT).show();

}

}

}

break;

}

}

}
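get_max_result returns only the single most probable class. If you would rather show several candidates, a top-k selection over the same result array is one option. The following is a minimal sketch in plain Java; the class TopK is a hypothetical helper and is not part of the demo.

```java
import java.util.Arrays;

// Hypothetical helper (not part of the demo): picks the indices of the
// k largest scores from a classification result array.
public final class TopK {
    private TopK() {}

    // Returns up to k indices ordered from highest to lowest score.
    // Equal scores keep their original index order because the sort is stable.
    public static int[] topK(float[] scores, int k) {
        Integer[] indices = new Integer[scores.length];
        for (int i = 0; i < indices.length; i++) {
            indices[i] = i;
        }
        Arrays.sort(indices, (a, b) -> Float.compare(scores[b], scores[a]));
        int n = Math.max(0, Math.min(k, scores.length));
        int[] out = new int[n];
        for (int i = 0; i < n; i++) {
            out[i] = indices[i];
        }
        return out;
    }

    public static void main(String[] args) {
        float[] scores = {0.1f, 0.7f, 0.05f, 0.15f};
        System.out.println(Arrays.toString(topK(scores, 2)));
    }
}
```

In predict_image, each returned index could be looked up in resultLabel in the same way the single best index is looked up now.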

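- Create an assets directory under main and add the label file to it; the code above reads it as cacheLabel.txt.

- Finally, do not forget to add the permissions to the AndroidManifest.xml configuration file: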

<uses-permission android:name="android.permission.CAMERA"/>

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

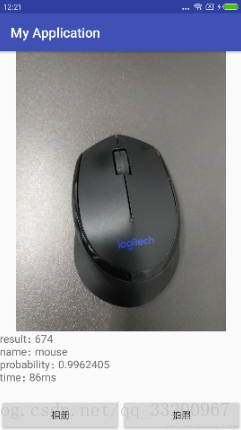

最後運行得到的結果如下圖:

注意:該項目對Android7.0相機兼容不是很好。

源碼下載: 上面已經是全部代碼了,如果讀者想更方便使用,可以直接下載該項目

References

- https://github.com/XiaoMi/mace

- https://mace.readthedocs.io/en/latest/