Preface

The previous article, “Deploying the ERNIE 4.5 Open-Source Model for Android Device Calls” (/article/1751684959287), showed how to deploy the ERNIE 4.5 open-source large language model on your own server. That approach is out of reach for readers without a GPU server, so this article shows how to “borrow” the computing power available on AiStudio to deploy the ERNIE 4.5 open-source model for personal use.

Deploying the ERNIE 4.5 Model

- Register on AiStudio: Registration is not covered here; sign up at https://aistudio.baidu.com/overview.

- Navigate to AiStudio’s Home Page: On the left side of the homepage, you’ll find a Deployment entry, as shown in the screenshot below.

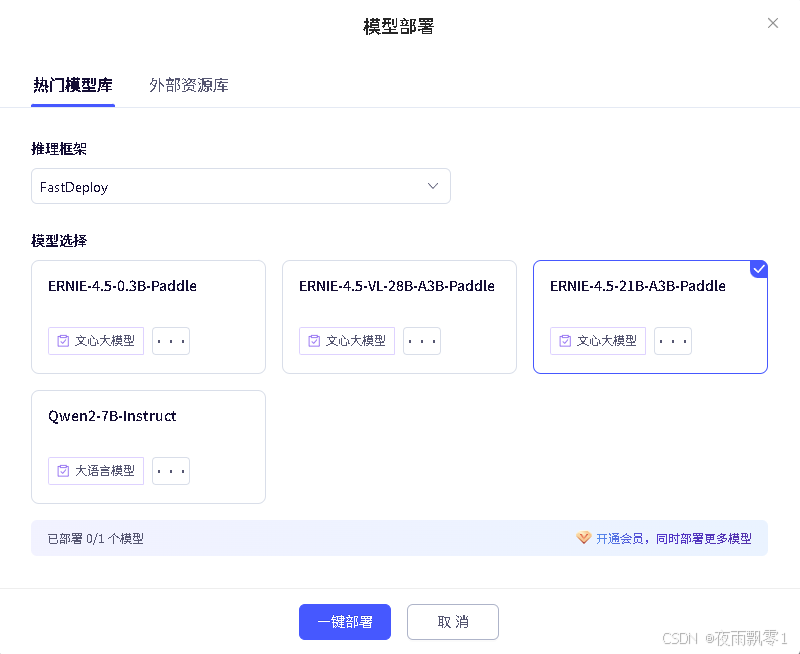

- Create a New Deployment: Select FastDeploy for deployment. You’ll see several available models; choose ERNIE-4.5-21B-A3B-Paddle (matching the model from the previous article).

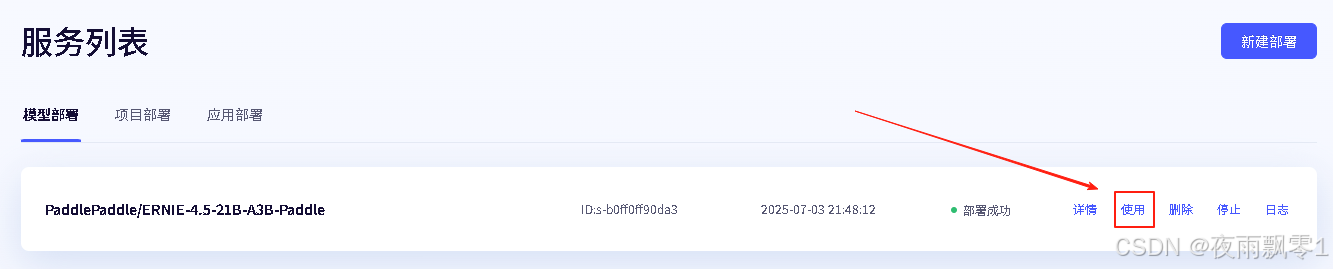

- View Deployment Details: After deployment completes, click “Use” to view the usage example.

- Extract API Information: The usage example includes two critical parameters: api_key and base_url. Notably, the deployed service supports the OpenAI API format, which simplifies integration.
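Because the deployment speaks the OpenAI API format, the two values can be checked with a plain HTTP request before the relay is wired up. The sketch below uses only the Python standard library and builds, without sending, a chat-completions request. The /chat/completions path and the Bearer header follow the OpenAI convention; the helper name build_chat_request and the placeholder URL and key are illustrative assumptions, and the model name "null" is simply the value used in the relay code later in this article.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, prompt: str,
                       model: str = "null") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values: substitute the base_url and api_key from the usage example.
request = build_chat_request("https://example.invalid/v1", "YOUR_API_KEY", "Hello")
print(request.full_url)
```

To send it for real, pass the object to urllib.request.urlopen(request) and decode the JSON body of the response.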

The ERNIE 4.5 model is now deployed. Next, we’ll set up a relay service.

Setting Up Your Own Relay Service

Compared to the previous article, only two parameters need modification: base_url and api_key in the openai.Client configuration. No other changes are required.
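Both values are account-specific secrets, so you may prefer to keep them out of the source file. A minimal sketch, assuming two hypothetical environment variable names (AISTUDIO_BASE_URL and AISTUDIO_API_KEY are made up for this example and are not defined by AiStudio):

```python
import os
from typing import Dict, Mapping


def load_client_kwargs(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the two keyword arguments that change for openai.Client."""
    required = ("AISTUDIO_BASE_URL", "AISTUDIO_API_KEY")  # hypothetical names
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "base_url": env["AISTUDIO_BASE_URL"].rstrip("/"),
        "api_key": env["AISTUDIO_API_KEY"],
    }


# Example with an explicit mapping instead of the real environment.
example_env = {
    "AISTUDIO_BASE_URL": "https://example.invalid/v1/",
    "AISTUDIO_API_KEY": "YOUR_API_KEY",
}
client_kwargs = load_client_kwargs(example_env)
print(client_kwargs["base_url"])
```

The resulting dictionary can be passed as openai.Client(**client_kwargs) in place of literal values.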

    import json
    import uuid
    from typing import Dict

    import openai


    class LLM:
        def __init__(self, host, port):
            # host and port are unused in this version; the AiStudio base_url takes their place.
            self.client = openai.Client(
                base_url="https://api-******************.aistudio-app.com/v1",
                api_key="*************************16"
            )
            self.system_prompt = {"role": "system", "content": "You are a helpful assistant."}
            # Conversation history per session, keyed by session_id.
            self.histories: Dict[str, list] = {}
            self.base_prompt = {"role": "user", "content": "Please act as an AI assistant named ERNIE 4.5."}
            self.base_prompt_res = {"role": "assistant", "content": "Okay, I've remembered. What questions do you have for me?"}

        # Streaming response
        def generate_stream(self, prompt, max_length=8192, top_p=0.8, temperature=0.95, session_id=None):
            if session_id and session_id in self.histories:
                history = self.histories[session_id]
            else:
                session_id = str(uuid.uuid4()).replace('-', '')
                history = [self.system_prompt, self.base_prompt, self.base_prompt_res]
            history.append({"role": "user", "content": prompt})
            print(f"History: {history}")
            print("=" * 70)
            print(f"【User Query】: {prompt}")
            all_output = ""
            response = self.client.chat.completions.create(
                model="null",
                messages=history,
                max_tokens=max_length,
                temperature=temperature,
                top_p=top_p,
                stream=True
            )
            # Add a placeholder for the reply so the user message is not overwritten below.
            history.append({"role": "assistant", "content": ""})
            self.histories[session_id] = history
            for chunk in response:
                if not chunk.choices:
                    continue
                output = chunk.choices[0].delta.content
                # Skip chunks that carry no text (content may be None or empty).
                if not output:
                    continue
                ret = {"response": output, "code": 0, "session_id": session_id}
                all_output += output
                history[-1] = {"role": "assistant", "content": all_output}
                # Each message is one JSON object terminated by a "\0" byte.
                yield json.dumps(ret).encode() + b"\0"
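The HTTP layer that exposes generate_stream is not shown in this article. As a rough sketch of what it could look like, the example below uses only Python's standard http.server module; the author's actual web framework is not stated, so treat this as one possible arrangement. The /llm path and the prompt, session_id, top_p, and temperature fields come from the Android code in this article, while EchoLLM, stream_reply, make_handler, and build_server are hypothetical names introduced here.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Iterator


class EchoLLM:
    """Stand-in for the LLM class above so the sketch runs without network access."""

    def generate_stream(self, prompt, max_length=8192, top_p=0.8,
                        temperature=0.95, session_id=None) -> Iterator[bytes]:
        for word in prompt.split():
            ret = {"response": word, "code": 0, "session_id": session_id or "demo"}
            yield json.dumps(ret).encode() + b"\0"


def stream_reply(llm, body: bytes) -> Iterator[bytes]:
    """Decode the JSON body sent by the app and forward it to generate_stream."""
    params = json.loads(body.decode("utf-8"))
    return llm.generate_stream(
        prompt=params["prompt"],
        top_p=float(params.get("top_p", 0.8)),
        temperature=float(params.get("temperature", 0.95)),
        session_id=params.get("session_id"),
    )


def make_handler(llm):
    class RelayHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/llm":
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            body = self.rfile.read(length)
            try:
                frames = stream_reply(llm, body)
            except (ValueError, KeyError):
                # Malformed JSON or missing "prompt" field.
                self.send_error(400)
                return
            self.send_response(200)
            self.send_header("Content-Type", "application/octet-stream")
            self.end_headers()
            # The handler defaults to HTTP/1.0, so the body ends when the connection closes.
            for frame in frames:
                self.wfile.write(frame)
                self.wfile.flush()

    return RelayHandler


def build_server(llm, host="0.0.0.0", port=8000) -> ThreadingHTTPServer:
    return ThreadingHTTPServer((host, port), make_handler(llm))


# Exercise the request-to-frames path without opening a socket.
frames = list(stream_reply(EchoLLM(), b'{"prompt": "hello world"}'))
print(frames)
```

With a real instance of the LLM class, build_server(llm).serve_forever() would start the relay on port 8000, matching the example CHAT_HOST value used for the Android app.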

Android Integration

The Android code remains identical to the previous article with no modifications needed. The core code in Android is as follows, where CHAT_HOST should be set to the IP address and port of your deployed relay service (e.g., http://192.168.1.100:8000).

    // Send text to the LLM API
    private void sendChat(String text) {
        if (text.isEmpty()) {
            return;
        }
        runOnUiThread(() -> sendBtn.setEnabled(false));
        Map<String, String> params = new HashMap<>();
        params.put("prompt", text);
        if (session_id != null) {
            params.put("session_id", session_id);
        }
        JSONObject jsonBody = new JSONObject(params);
        try {
            jsonBody.put("top_p", 0.8);
            jsonBody.put("temperature", 0.95);
        } catch (JSONException e) {
            throw new RuntimeException(e);
        }
        RequestBody requestBody = RequestBody.create(
                jsonBody.toString(),
                MediaType.parse("application/json; charset=utf-8")
        );
        Request request = new Request.Builder()
                .url(CHAT_HOST + "/llm")
                .post(requestBody)
                .build();
        OkHttpClient client = new OkHttpClient.Builder()
                .connectTimeout(30, TimeUnit.SECONDS)
                .readTimeout(30, TimeUnit.SECONDS)
                .build();
        try {
            Response response = client.newCall(request).execute();
            ResponseBody body = response.body();
            if (body == null) {
                return;
            }
            InputStream inputStream = body.byteStream();
            byte[] buffer = new byte[2048];
            int len;
            StringBuilder sb = new StringBuilder();
            StringBuilder allResponse = new StringBuilder();
            while ((len = inputStream.read(buffer)) != -1) {
                try {
                    // Drop the last byte of the read, which is expected to be the "\0" delimiter.
                    String data = new String(buffer, 0, len - 1, StandardCharsets.UTF_8);
                    sb.append(data);
                    // Keep buffering until the text ends with '}' (0x7d), i.e. a complete JSON object.
                    // The len < 2 check avoids reading buffer[-1] when only one byte arrived.
                    if (len < 2 || buffer[len - 2] != 0x7d) {
                        continue;
                    }
                    data = sb.toString();
                    sb = new StringBuilder();
                    Log.d(TAG, data);
                    JSONObject result = new JSONObject(data);
                    int code = result.getInt("code");
                    String resp = result.getString("response");
                    allResponse.append(resp);
                    session_id = result.getString("session_id");
                    runOnUiThread(() -> {
                        // Update the last received bubble if there is one; otherwise add a new one.
                        if (mMsgList.size() > 0 && mMsgList.get(mMsgList.size() - 1).getType() == Msg.TYPE_RECEIVED) {
                            mMsgList.get(mMsgList.size() - 1).setContent(allResponse.toString());
                            mAdapter.notifyItemChanged(mMsgList.size() - 1);
                        } else {
                            mMsgList.add(new Msg(resp, Msg.TYPE_RECEIVED));
                            mAdapter.notifyItemInserted(mMsgList.size() - 1);
                        }
                        mRecyclerView.scrollToPosition(mMsgList.size() - 1);
                    });
                } catch (JSONException e) {
                    e.printStackTrace();
                }
            }
            inputStream.close();
            response.close();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            runOnUiThread(() -> sendBtn.setEnabled(true));
        }
    }
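The read loop above works when every read() call returns exactly one complete message ending in the "\0" delimiter. A network stream does not guarantee that: a message can be split across reads, or several can arrive in one read. A minimal sketch of a decoder that tolerates both cases is shown below, written in Python for brevity; the class name FrameDecoder is illustrative, and the same buffering idea carries over to Java.

```python
import json
from typing import List


class FrameDecoder:
    """Accumulate raw bytes and emit one dict per complete NUL-terminated JSON message."""

    def __init__(self) -> None:
        self._pending = bytearray()

    def feed(self, data: bytes) -> List[dict]:
        self._pending.extend(data)
        messages = []
        while True:
            end = self._pending.find(b"\0")
            if end == -1:
                break  # no complete message yet; wait for more bytes
            raw = bytes(self._pending[:end])
            del self._pending[:end + 1]  # drop the message and its delimiter
            if raw:
                messages.append(json.loads(raw.decode("utf-8")))
        return messages


decoder = FrameDecoder()
stream = (
    json.dumps({"response": "你好", "code": 0, "session_id": "abc"}).encode() + b"\0"
    + json.dumps({"response": "!", "code": 0, "session_id": "abc"}).encode() + b"\0"
)
# Feed the stream in awkward 7-byte pieces to imitate arbitrary network reads.
decoded = []
for start in range(0, len(stream), 7):
    decoded.extend(decoder.feed(stream[start:start + 7]))
print("".join(message["response"] for message in decoded))
```

Decoding only after a full message has been collected also avoids cutting a multi-byte UTF-8 character in half at a read boundary.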

Effect Preview:

Obtaining the Source Code

To obtain the complete source code, please reply to the official account with the keyword “Deploying ERNIE 4.5 Open-Source Model for Android Device Calls”.