Introduction

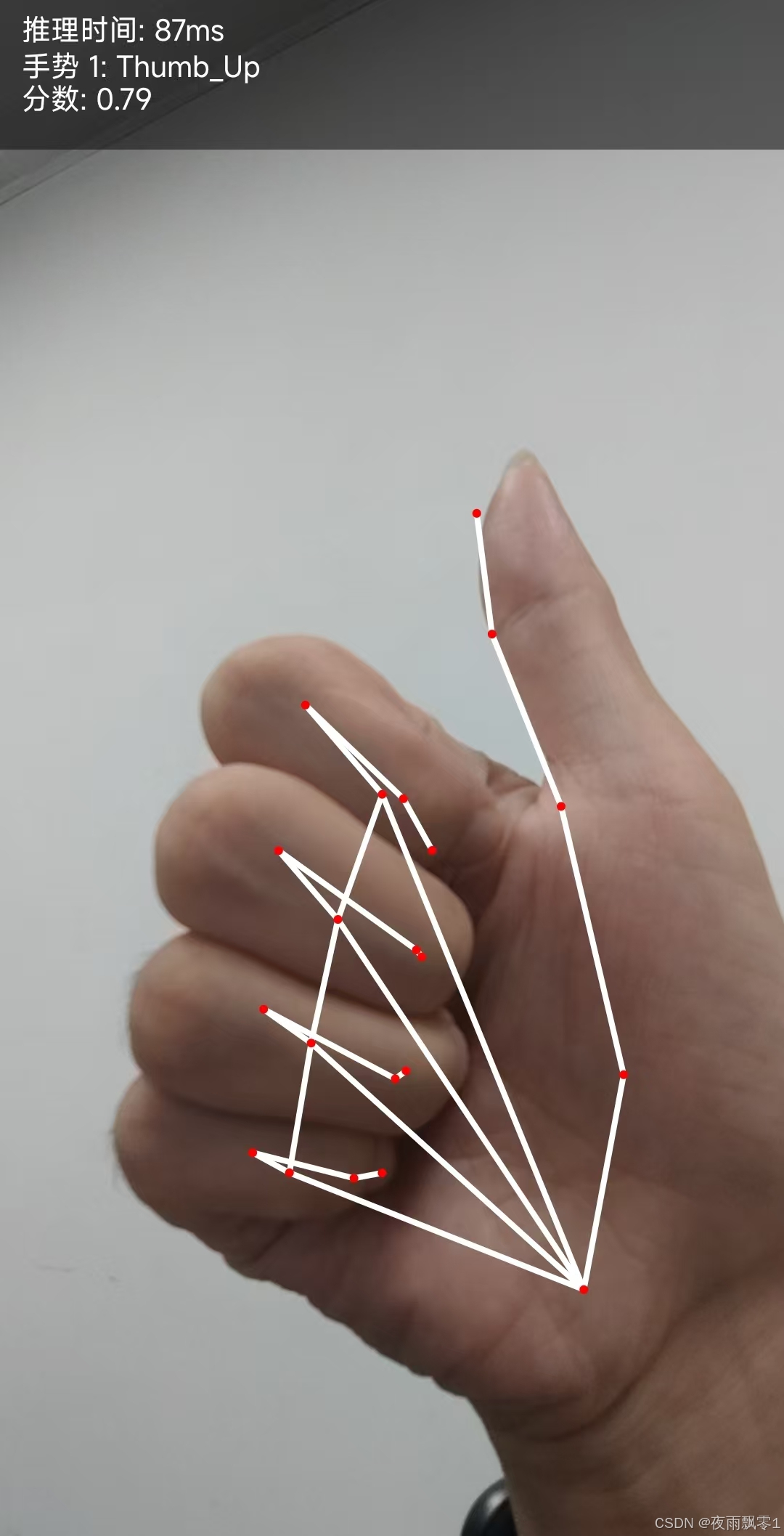

This project implements a real-time gesture recognition Android application built on Google MediaPipe and Android CameraX. It uses the MediaPipe Tasks Gesture Recognizer API, which recognizes multiple gesture types, including common ones such as Thumb Up, Victory, and Open Palm. The app also detects and draws hand key points in real time. Using the front camera, it detects and classifies hand gestures as they appear and displays the recognition results and confidence scores on screen.

Beyond research and development on gesture recognition, the project serves as a practical reference for using MediaPipe on Android. It should be useful to developers learning computer vision, gesture recognition, or Android camera development.

Project Features

- Real-time Gesture Recognition: Uses the MediaPipe gesture recognition model to recognize 7 common gesture types

- Hand Key Point Detection: Detects 21 hand key points in real time and draws them together with the skeleton connections

- Multi-hand Detection: Detects multiple hands simultaneously (the maximum number is configurable)

- CPU Inference: Runs inference on the CPU for compatibility and stability across a wide range of devices

- Real-time Drawing: Custom OverlayView implementation for real-time drawing of hand key points and recognition results

- Camera Integration: Camera preview and image analysis implemented using CameraX

- Permission Management: Requests the camera permission at runtime and handles the user's response
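The 21 key points follow MediaPipe's hand landmark numbering: 0 is the wrist, and each finger contributes four points from base to tip (1-4 thumb, 5-8 index, 9-12 middle, 13-16 ring, 17-20 pinky). The plain-Java sketch below, which runs without Android, lists the bone connections and converts normalized landmark coordinates into pixel line segments of the kind an overlay view would pass to Canvas.drawLine. The class name HandSkeleton and the mirror flag are illustrative assumptions, not code from the project. Whether mirroring is needed depends on whether the front-camera frame was already flipped before inference, and the mapping assumes the view has the same aspect ratio as the analyzed frame.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Illustrative sketch (not the project's OverlayView): the hand skeleton as
 * index pairs over MediaPipe's 21 hand landmarks, plus a helper that turns
 * normalized landmarks into pixel line segments for drawing.
 * Indices: 0 = wrist, 1-4 thumb, 5-8 index, 9-12 middle, 13-16 ring, 17-20 pinky.
 */
public class HandSkeleton {
    public static final int NUM_LANDMARKS = 21;

    // Each pair {a, b} is one bone drawn from landmark a to landmark b.
    public static final int[][] CONNECTIONS = {
        {0, 1}, {1, 2}, {2, 3}, {3, 4},                  // thumb
        {0, 5}, {5, 6}, {6, 7}, {7, 8},                  // wrist to index finger
        {5, 9}, {9, 10}, {10, 11}, {11, 12},             // palm edge and middle finger
        {9, 13}, {13, 14}, {14, 15}, {15, 16},           // palm edge and ring finger
        {13, 17}, {0, 17}, {17, 18}, {18, 19}, {19, 20}  // palm edges and pinky
    };

    /**
     * Converts normalized landmarks ({x, y}, each in [0, 1]) into segments
     * {startX, startY, stopX, stopY} in view pixels. Assumes the view and the
     * analyzed frame share an aspect ratio. If mirror is true, x is flipped
     * horizontally (only needed if the frame was not flipped before inference).
     */
    public static List<float[]> toSegments(float[][] landmarks, int viewWidth,
                                           int viewHeight, boolean mirror) {
        if (landmarks.length != NUM_LANDMARKS) {
            throw new IllegalArgumentException(
                "expected " + NUM_LANDMARKS + " landmarks, got " + landmarks.length);
        }
        List<float[]> segments = new ArrayList<>(CONNECTIONS.length);
        for (int[] bone : CONNECTIONS) {
            float[] a = landmarks[bone[0]];
            float[] b = landmarks[bone[1]];
            segments.add(new float[] {
                toPixelX(a[0], viewWidth, mirror), a[1] * viewHeight,
                toPixelX(b[0], viewWidth, mirror), b[1] * viewHeight
            });
        }
        return segments;
    }

    // Scales a normalized x to pixels, optionally mirroring it first.
    private static float toPixelX(float normalizedX, int viewWidth, boolean mirror) {
        return (mirror ? 1f - normalizedX : normalizedX) * viewWidth;
    }

    public static void main(String[] args) {
        // Fake hand: landmarks spread evenly across the width at mid-height.
        float[][] landmarks = new float[NUM_LANDMARKS][];
        for (int i = 0; i < NUM_LANDMARKS; i++) {
            landmarks[i] = new float[] {i / 20f, 0.5f};
        }
        for (float[] s : toSegments(landmarks, 1080, 1920, true)) {
            System.out.printf("line (%.0f, %.0f) -> (%.0f, %.0f)%n", s[0], s[1], s[2], s[3]);
        }
    }
}
```

In an Android view, each segment s would be drawn with canvas.drawLine(s[0], s[1], s[2], s[3], paint) inside onDraw, with the x and y values taken from the recognizer's landmark output.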

Technical Architecture:

- MediaPipe: Google’s open-source machine learning framework providing efficient gesture recognition capabilities

- CameraX: Android Jetpack camera library simplifying camera operations and lifecycle management

- Custom Drawing: Canvas-based real-time drawing system supporting visualization of hand key points and recognition results

- Multi-threaded Processing: Background thread for image analysis to avoid UI thread blocking
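The background-thread idea can be illustrated in plain Java. Two related details: in MediaPipe's live-stream running mode, the timestamps passed with each frame must increase monotonically, and CameraX's ImageAnalysis can discard stale frames with its keep-only-latest backpressure strategy. The hypothetical FrameProcessor below, which is not part of the project, runs per-frame work on a single background thread, drops frames that arrive while one is still in flight, and hands out strictly increasing timestamps. It is a sketch of the pattern under those assumptions, not the app's actual threading code.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.LongConsumer;

/**
 * Hypothetical helper (not from the project): runs per-frame work on one
 * background thread, drops frames while a previous frame is still being
 * processed, and assigns strictly increasing timestamps to accepted frames.
 */
public class FrameProcessor {
    private final ExecutorService executor = Executors.newSingleThreadExecutor();
    private final AtomicBoolean busy = new AtomicBoolean(false);
    private boolean hasTimestamp = false;
    private long lastTimestampMs;

    /** Returns nowMs, or the previous timestamp + 1 if the clock did not advance. */
    public synchronized long nextTimestamp(long nowMs) {
        lastTimestampMs = hasTimestamp ? Math.max(nowMs, lastTimestampMs + 1) : nowMs;
        hasTimestamp = true;
        return lastTimestampMs;
    }

    /**
     * Submits one frame. Returns false if the frame was dropped because
     * another frame is still in flight or the processor has been shut down.
     */
    public boolean submit(long nowMs, LongConsumer work) {
        if (!busy.compareAndSet(false, true)) {
            return false; // previous frame still running: drop this one
        }
        long timestampMs = nextTimestamp(nowMs);
        try {
            executor.execute(() -> {
                try {
                    work.accept(timestampMs);
                } finally {
                    busy.set(false); // ready for the next frame
                }
            });
            return true;
        } catch (RejectedExecutionException e) {
            busy.set(false); // executor already shut down
            return false;
        }
    }

    /** Stops accepting frames and waits briefly for the current one to finish. */
    public void shutdown() {
        executor.shutdown();
        try {
            executor.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Results produced on the background thread still need to be handed back to the main thread (for example with Activity.runOnUiThread) before a view is updated.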

Hand Gestures Supported by the Official Model

The official MediaPipe gesture recognition model supports the following 7 gestures:

| Icon | Label | Description | Typical Use |
|---|---|---|---|
| 👍 | Thumb_Up | Thumb up | Like, confirm |
| 👎 | Thumb_Down | Thumb down | Disapprove, reject |
| ✌️ | Victory | Victory (V) sign | Taking photos, celebrating |
| 🤟 | ILoveYou | "I love you" sign | Expressing affection |
| ✊ | Closed_Fist | Closed fist | Grab, confirm |
| ✋ | Open_Palm | Open palm | Stop, show |
| ☝️ | Pointing_Up | Index finger pointing up | Indicate, select |
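In MediaPipe's Java API, result.gestures() gives, for each detected hand, a list of categories, each with a label name and a score; the label names are the ones in the table. A minimal sketch of picking the best category above a threshold and formatting it for display follows. GestureCategory is a hypothetical stand-in for MediaPipe's Category class (which exposes categoryName() and score()), and the threshold and display names are illustrative choices rather than values taken from the project.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/**
 * Sketch of turning recognizer output into on-screen text. GestureCategory is
 * a hypothetical stand-in for MediaPipe's Category (label name + score).
 */
public class GestureLabels {
    public static final class GestureCategory {
        public final String name;
        public final float score;

        public GestureCategory(String name, float score) {
            this.name = name;
            this.score = score;
        }
    }

    // Human-readable names for the seven labels of the official model.
    private static final Map<String, String> DISPLAY_NAMES = new HashMap<>();
    static {
        DISPLAY_NAMES.put("Thumb_Up", "Thumb Up");
        DISPLAY_NAMES.put("Thumb_Down", "Thumb Down");
        DISPLAY_NAMES.put("Victory", "Victory");
        DISPLAY_NAMES.put("ILoveYou", "I Love You");
        DISPLAY_NAMES.put("Closed_Fist", "Closed Fist");
        DISPLAY_NAMES.put("Open_Palm", "Open Palm");
        DISPLAY_NAMES.put("Pointing_Up", "Pointing Up");
    }

    /** Highest-scoring category with score >= minScore, or null if none qualifies. */
    public static GestureCategory best(List<GestureCategory> categories, float minScore) {
        GestureCategory best = null;
        for (GestureCategory c : categories) {
            if (c.score >= minScore && (best == null || c.score > best.score)) {
                best = c;
            }
        }
        return best;
    }

    /** For example "Thumb Up 0.93"; labels not in the map (e.g. custom-model ones) are shown as-is. */
    public static String format(GestureCategory c) {
        String name = DISPLAY_NAMES.getOrDefault(c.name, c.name);
        return String.format(Locale.ROOT, "%s %.2f", name, c.score);
    }
}
```

Falling back to the raw label keeps the display working when the model is swapped for a custom one whose labels are not in the table.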

Using a Custom Model

Refer to my previous article, “Training Custom Gesture Recognition Models with MediaPipe”, to train a custom model and obtain the custom_gesture_recognizer.task model file. Place the file in the assets/ directory and change the MODEL_FILE parameter in GestureRecognizerHelper to that file name.

Project Introduction

Here are the core code paths of the project:

```text
app/src/main/java/com/yeyupiaoling/gesturerecognizer/
├── MainActivity.java               # Main activity class, responsible for camera integration and lifecycle management
├── GestureRecognizerHelper.java    # MediaPipe gesture recognition encapsulation class, core recognition logic
└── OverlayView.java                # Custom drawing view for hand key points and result drawing

app/src/main/assets/
└── gesture_recognizer.task         # MediaPipe pre-trained model file
```

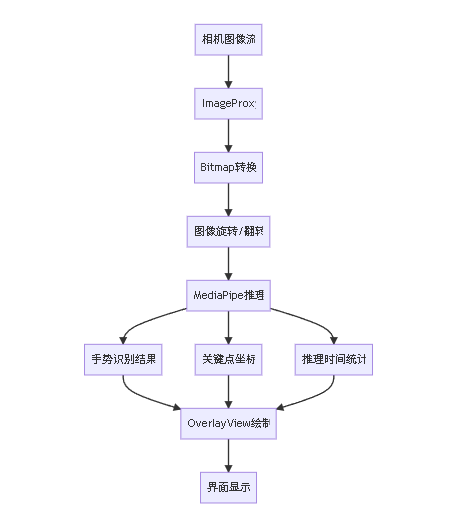

- Below is a simple data flow diagram of the project

- The core project dependencies are configured in app/build.gradle:

```groovy
dependencies {
    // MediaPipe gesture recognition
    implementation 'com.google.mediapipe:tasks-vision:0.10.14'

    // CameraX dependencies
    implementation 'androidx.camera:camera-core:1.3.4'
    implementation 'androidx.camera:camera-camera2:1.3.4'
    implementation 'androidx.camera:camera-lifecycle:1.3.4'
    implementation 'androidx.camera:camera-view:1.3.4'
}
```

- The core adjustable parameters are configured in GestureRecognizerHelper.java:

```java
// Hand detection confidence (0.0-1.0)
private final float minHandDetectionConfidence = 0.5f;

// Hand tracking confidence (0.0-1.0)
private final float minHandTrackingConfidence = 0.5f;

// Hand presence confidence (0.0-1.0)
private final float minHandPresenceConfidence = 0.5f;

// Maximum number of hands to detect (1-2)
private final int maxNumHands = 2;
```
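Because these values are private final fields, changing them means editing GestureRecognizerHelper and rebuilding. To adjust them at runtime instead (for example, from a settings screen), one option is to pass them in and range-check them. RecognizerSettings below is a hypothetical sketch of that approach, not a class in the project. It enforces the 0.0-1.0 range from the comments above but only requires maxNumHands to be at least 1 rather than capping it at the suggested 2. In the helper, the three thresholds would then be handed to the MediaPipe GestureRecognizerOptions builder (setMinHandDetectionConfidence, setMinTrackingConfidence, setMinHandPresenceConfidence).

```java
/**
 * Hypothetical settings holder: the same four parameters as
 * GestureRecognizerHelper, but passed in and range-checked instead of
 * hard-coded as final fields.
 */
public final class RecognizerSettings {
    public final float minHandDetectionConfidence;
    public final float minHandTrackingConfidence;
    public final float minHandPresenceConfidence;
    public final int maxNumHands;

    public RecognizerSettings(float detection, float tracking, float presence, int maxNumHands) {
        this.minHandDetectionConfidence = checkUnit("minHandDetectionConfidence", detection);
        this.minHandTrackingConfidence = checkUnit("minHandTrackingConfidence", tracking);
        this.minHandPresenceConfidence = checkUnit("minHandPresenceConfidence", presence);
        if (maxNumHands < 1) {
            throw new IllegalArgumentException("maxNumHands must be >= 1, got " + maxNumHands);
        }
        this.maxNumHands = maxNumHands;
    }

    /** Defaults matching the values listed above. */
    public static RecognizerSettings defaults() {
        return new RecognizerSettings(0.5f, 0.5f, 0.5f, 2);
    }

    /** Returns a copy with a different hand count, e.g. 1 for a single-hand UI. */
    public RecognizerSettings withMaxNumHands(int n) {
        return new RecognizerSettings(minHandDetectionConfidence, minHandTrackingConfidence,
                                      minHandPresenceConfidence, n);
    }

    // Accepts values in [0.0, 1.0]; the negated check also rejects NaN.
    private static float checkUnit(String name, float value) {
        if (!(value >= 0f && value <= 1f)) {
            throw new IllegalArgumentException(name + " must be in [0.0, 1.0], got " + value);
        }
        return value;
    }
}
```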

Demonstration Screenshot

Get Source Code

To obtain the source code, reply “Android Project Source Code for Gesture Recognition” to the author's official account.